Traditional platforms treat data as a static resource, an approach that no longer meets today's data demands. Learn why it's important to monitor both data at rest and data in motion.

Data in Motion Series, Part 1: What is Data in Motion?

This article is the first of three in our “Data in Motion” series.

Data in motion (sometimes referred to as “data in flight”) refers to the digital information actively flowing between systems and locations. Unlike static data resting in databases, data in motion is constantly on the move, whether it’s being transmitted, processed, updated, or otherwise manipulated.

Traditional systems treat data as a static resource to be stored and analyzed in batches. That no longer works; today's data demands a more dynamic approach. In modern businesses, data flows constantly, and treating it as if it were always at rest leads to serious data management pitfalls.

Three States of Data

Data exists in one of three states. It’s either at rest, in motion, or in use:

Data at rest refers to information stored in databases, data warehouses, or file systems, waiting to be processed or analyzed. Think annual financial reports or archived customer records.

Data in motion refers to information actively flowing between systems, applications, or storage locations. Examples include live transaction streams, real-time sensor readings, or continuous customer interaction data.

Data in use refers to information actively being processed, analyzed, or transformed by applications. Think real-time fraud detection algorithms processing transaction streams, or recommendation engines analyzing user behavior.

The Technical Foundations of Data in Motion

Modern data-in-motion architectures rest on three essential technical capabilities that work together to enable real-time data processing at scale.

Stream processing

Stream processing systems form the backbone of data-in-motion architectures. Unlike traditional batch systems that process data in fixed chunks, platforms like Apache Kafka and Apache Flink handle data continuously as it arrives. They maintain awareness of patterns and relationships between events, apply real-time analytics, and ensure accurate processing even when data arrives out of order. These systems effectively serve as the central nervous system for data in motion, coordinating the flow and processing of information.
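To make the out-of-order point concrete, here is a minimal sketch in plain Python of event-time windowing with a watermark. It is not how Kafka or Flink implement it, and it uses neither library's API. The `Event` and `TumblingWindowSum` names, the 10-second window, and the 5-second lateness allowance are all assumptions chosen for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    key: str        # hypothetical source id, e.g. a sensor or an account
    timestamp: int  # event time in seconds, assigned by the producer
    value: float


class TumblingWindowSum:
    """Sums values per key in fixed event-time windows, tolerating late events."""

    def __init__(self, window_size: int, allowed_lateness: int):
        self.window_size = window_size
        self.allowed_lateness = allowed_lateness
        self.max_seen = 0                        # highest event time seen so far
        self.open_windows = defaultdict(float)   # (window_start, key) -> running sum

    def process(self, event: Event) -> list[tuple[int, str, float]]:
        """Ingest one event and return any windows that are now final."""
        window_start = event.timestamp - event.timestamp % self.window_size
        watermark = self.max_seen - self.allowed_lateness
        if window_start + self.window_size <= watermark:
            return []  # too late: this window was already finalized, so drop it
        self.open_windows[(window_start, event.key)] += event.value
        self.max_seen = max(self.max_seen, event.timestamp)
        return self._close_windows()

    def _close_windows(self) -> list[tuple[int, str, float]]:
        watermark = self.max_seen - self.allowed_lateness
        closed = []
        for start, key in sorted(self.open_windows):
            if start + self.window_size <= watermark:
                closed.append((start, key, self.open_windows.pop((start, key))))
        return closed


# The event with timestamp 8 arrives after the event with timestamp 12,
# but still lands in the 0-10 window because that window has not closed yet.
stream = [Event("a", 1, 1.0), Event("a", 12, 2.0), Event("a", 8, 3.0),
          Event("a", 16, 1.0), Event("a", 3, 9.0), Event("a", 27, 4.0)]
windows = TumblingWindowSum(window_size=10, allowed_lateness=5)
finalized = [result for event in stream for result in windows.process(event)]
```

The design choice worth noticing is that results are assigned by the time an event happened, not the time it arrived. The lateness allowance trades a short delay in finalizing each window for correct totals when events arrive slightly out of order.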

Event-driven architecture

Event-driven architecture represents a fundamental shift in how applications interact with data. Rather than periodically querying databases for updates, applications in an event-driven system react immediately to changes as they occur. Think of it like a newsroom where reporters don't have to keep checking for stories — instead, stories find them the moment they break. This architectural approach enables systems to respond instantly to new information, whether it's a fraudulent transaction that needs to be blocked or a customer interaction that requires immediate attention.
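The difference between polling and reacting can be sketched with a tiny in-process publish/subscribe bus. This is only an illustration under stated assumptions: `EventBus`, the `transactions` topic, and the fixed-amount rule are hypothetical, and a production system would typically use a message broker rather than direct function calls.

```python
from collections import defaultdict
from typing import Callable

Handler = Callable[[dict], None]


class EventBus:
    """Minimal in-process publish/subscribe bus (illustrative only)."""

    def __init__(self) -> None:
        self._handlers: dict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._handlers[topic].append(handler)

    def publish(self, topic: str, event: dict) -> None:
        # Each subscriber runs as soon as the event is published;
        # nothing has to poll a database to find out about it.
        for handler in self._handlers.get(topic, []):
            handler(event)


blocked: list[str] = []


def block_if_suspicious(event: dict) -> None:
    # Hypothetical rule: flag any transaction above a fixed amount.
    if event["amount"] > 10_000:
        blocked.append(event["transaction_id"])


bus = EventBus()
bus.subscribe("transactions", block_if_suspicious)
bus.publish("transactions", {"transaction_id": "t-1", "amount": 42.0})
bus.publish("transactions", {"transaction_id": "t-2", "amount": 25_000.0})
# blocked is now ["t-2"]
```

The publisher knows nothing about who is listening, so new reactions (alerts, audits, notifications) can be added by subscribing another handler rather than changing the code that produces events.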

Scalable infrastructure

Supporting this continuous flow of data requires infrastructure built for elasticity and resilience. Operational observability helps keep data in check and monitors for anomalies. Like a city's water system that must handle both typical daily usage and sudden surges, data-in-motion infrastructure automatically scales to match demand. It's distributed across multiple locations to prevent single points of failure, contains sophisticated monitoring systems to detect issues before they impact operations, and leverages cloud technologies to dynamically allocate resources where they're needed most.
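As a rough illustration of scaling to match demand, the sketch below sizes a pool of stream workers from the current event rate. The function name, the per-worker capacity figure, and the worker limits are assumptions made for this example; real autoscalers also smooth their input metrics and rate-limit scaling decisions.

```python
import math


def desired_workers(events_per_second: float,
                    per_worker_capacity: float,
                    min_workers: int = 1,
                    max_workers: int = 50) -> int:
    """Return how many workers are needed to keep up with the incoming rate."""
    if per_worker_capacity <= 0:
        raise ValueError("per_worker_capacity must be positive")
    needed = math.ceil(events_per_second / per_worker_capacity)
    # Clamp to a floor (for resilience) and a ceiling (for cost control).
    return max(min_workers, min(max_workers, needed))


typical_load = desired_workers(events_per_second=900, per_worker_capacity=250)     # 4
sudden_surge = desired_workers(events_per_second=100_000, per_worker_capacity=250)  # capped at 50
```

The floor keeps at least one worker running during quiet periods, and the ceiling is a guard against a runaway metric provisioning unlimited resources.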

Real-World Examples of Data in Motion, by Sector

Different sectors share some common through-lines when it comes to data in motion. They rely on continuous flow rather than batch processing, across multiple interconnected systems. Data in motion shapes real-time decision-making and directly affects the user or customer. Let’s take a look:

Financial Services - Managing Risks

Modern banking operations rely on continuous data flows to serve customers and manage risk. For example, real-time fraud detection systems analyze transaction patterns as they occur. Banking systems need to manage sensitive customer data in motion, as in the cases of Lendly and an F250 financial services company. Trading platforms process market data streams to execute automated trades with down-to-the-minute data, and credit card authorization systems validate purchases in milliseconds.

E-commerce - Instant Expectations

Data in motion now touches several different aspects of the retail process. Inventory systems synchronize stock levels across physical and digital stores, while dynamic pricing engines adjust prices based on real-time demand. Recommendation systems work by processing customer browsing patterns in real-time.

Transportation and Logistics - Reliance on Constant Flow

The movement of goods and people generates continuous data streams. Fleet management systems track vehicle locations and performance metrics, while traffic management systems process sensor data to optimize flow (a great example of this would be TQL). Package tracking systems now update delivery statuses in real time, and ride-sharing platforms match drivers and passengers based on location data and on supply and demand.

Healthcare - Mission Critical Data in Motion

Modern healthcare delivery depends on immediate access to patient data. Patient monitoring systems track vital signs in real-time, while pharmacy systems validate prescriptions and drug interactions. Emergency response systems coordinate ambulance dispatch. Telemedicine platforms stream patient-doctor consultations.

Retail - From Static to Streaming

Consider a modern retail business. Every second, new data streams in from multiple sources: point-of-sale systems, warehouse sensors to track inventory levels, website analytics, and supply chain systems. All of this data is in continuous motion.

Traditional batch processing would collect this data periodically, perhaps nightly or weekly, before processing it for insights. But today, waiting hours or days to react to changing conditions isn’t sufficient; it’s a competitive disadvantage. Capturing data in motion (often through data pipeline automation and other related strategies) solves that problem.

The Greater Business Impact of Data in Motion

Organizations leveraging continuous data flows are discovering entirely new ways to compete and grow.

Predictive maintenance represents one of the most impactful applications. By analyzing equipment data streams in real-time, organizations can anticipate failures weeks in advance, scheduling maintenance during planned downtime rather than rushing to repair unexpected breakdowns.
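A very simple stand-in for this kind of streaming analysis is a rolling check that flags readings far outside the recent norm. The sketch below is an assumption-laden illustration, not a predictive maintenance model: the class name, the window of 20 readings, the 3-standard-deviation threshold, and the temperature values are all hypothetical.

```python
from collections import deque
from statistics import mean, stdev


class RollingAnomalyDetector:
    """Flags readings that deviate strongly from a sliding window of recent values."""

    def __init__(self, window: int = 20, threshold: float = 3.0, warmup: int = 5):
        self.readings: deque[float] = deque(maxlen=window)
        self.threshold = threshold
        self.warmup = warmup  # minimum readings needed before judging anything

    def observe(self, value: float) -> bool:
        """Record one reading and report whether it looks anomalous."""
        is_anomaly = False
        if len(self.readings) >= self.warmup:
            mu = mean(self.readings)
            sigma = stdev(self.readings)
            if sigma > 0 and abs(value - mu) > self.threshold * sigma:
                is_anomaly = True
        # The reading joins the window either way, so a sustained shift
        # eventually becomes the new baseline.
        self.readings.append(value)
        return is_anomaly


# Hypothetical bearing temperatures from one machine, ending in a sharp spike.
temperatures = [70.0, 70.5, 69.8, 70.2, 70.1, 69.9, 70.3, 85.0]
detector = RollingAnomalyDetector()
flags = [detector.observe(t) for t in temperatures]
# Only the final reading (85.0) is flagged.
```

Each reading is evaluated the moment it arrives, which is the property that matters here. Anticipating failures well in advance would require a model trained on historical failure data rather than a fixed threshold.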

Market responsiveness has evolved into a real-time discipline. Companies can now detect and respond to changing market conditions as they emerge, rather than after they've peaked. Investment firms leverage sentiment analysis on social media streams to adjust positions before market movements occur. Energy companies balance grid loads by predicting demand spikes minutes before they happen.

Continuous experimentation through reliable data in motion has become a strategic advantage. According to the 2024 State of Data Observability report, 92% of leaders consider data reliability core to their data strategy. Organizations can now test business hypotheses in real-time, measuring impact immediately rather than waiting for batch analysis. Product teams can trial multiple features simultaneously, measuring user response instantly and scaling successful innovations while quickly retiring failed experiments. This rapid learning cycle has compressed innovation timelines from months to days.

The risk landscape has also transformed. Rather than discovering security breaches or compliance violations after the fact, organizations can now detect anomalies as they occur. This capability has shifted cyber defense from post-breach investigation to active threat prevention.

The Future is in Motion

From Southwest's $800 million loss to TQL's dramatic reporting optimization, preventing major failures requires catching issues while data is moving. Basic monitoring tools can identify problems after they occur. But a more sophisticated approach is required for the end-to-end visibility and predictive capabilities needed to prevent costly disruptions. Organizations that treat data as a dynamic, flowing resource rather than a static asset gain the agility to compete in rapidly changing markets.

As we look ahead, the importance of data in motion will only grow. The proliferation of IoT devices, the rise of edge computing, and increasing customer expectations for real-time experiences all point to a future where success depends on managing continuous data flows. The technical foundations we've discussed—stream processing, event-driven architectures, and scalable infrastructure—provide the building blocks for this future.

The transformation is sure to bring new challenges in the fields of governance, data management, and data quality. In our next piece, we’ll cover how to prevent business disruption at each stage of the data lifecycle. We’ll also go through success stories from Netflix, Target, and others to demonstrate how true operational efficiency happens through end-to-end pipeline monitoring.

Read Data in Motion Part 2 here

Read Data in Motion Part 3 here

Keep Reading

January 21, 2026

2026 Predictions for Data Leaders: Where Accountability Moves Next2026 predictions for data leaders on where accountability shifts next, from AI data pipelines and data quality risk to stack consolidation and audited data products.

Read More.png)

December 9, 2025

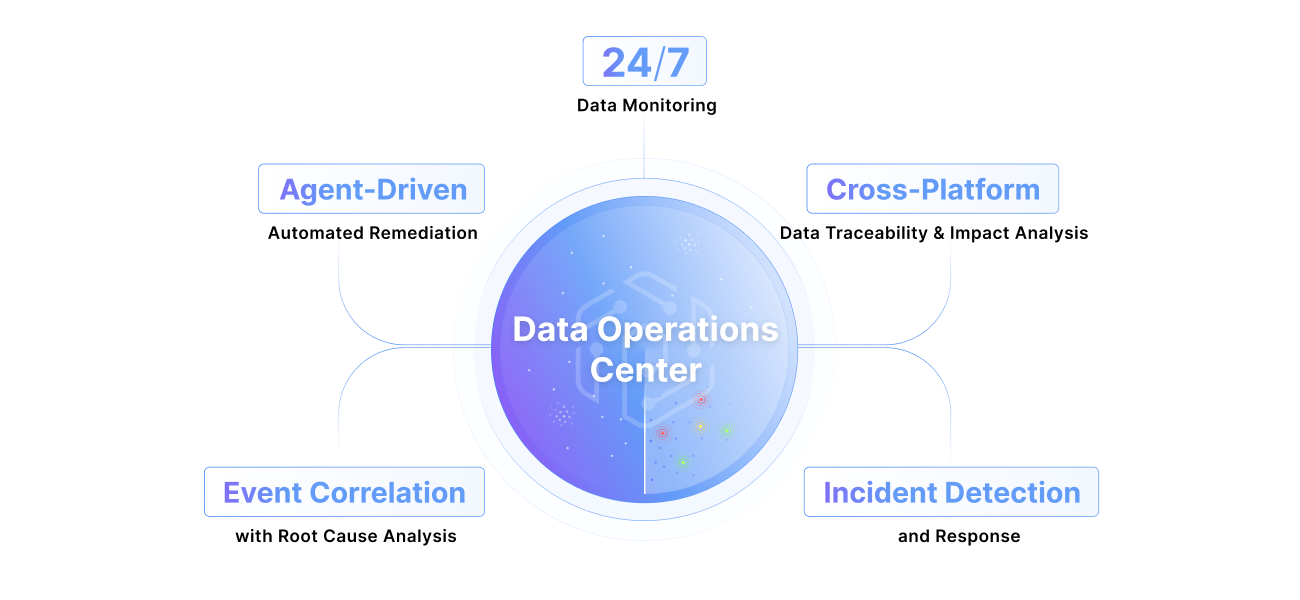

From NOC and SOC to DOC: The New Standard for Enterprise Data OperationsThe DOC isn’t a “nice-to-have,” it’s the next frontier—and it’s here. Read about the evolution from NOC to SOC to DOC, three functions enterprises cannot go without.

Read More.png)

October 28, 2025

Pantomath Achieves ISO/IEC 27001:2022 CertificationPantomath announced today that it has achieved ISO/IEC 27001:2022 certification for its Information Security Management System (ISMS).

Read More