Here’s the uncomfortable truth: the way most organizations manage data incidents in ServiceNow is broken. Pantomath's Somesh Saxena gives his thoughts.

SLA Accuracy in ServiceNow: Are You Tracking the Signal or the Noise?

Here’s the uncomfortable truth: the way most organizations manage data incidents in ServiceNow is broken. In the pursuit of SLA compliance, too many enterprises are lulled into a false sense of operational health – while their data pipelines quietly bleed accuracy, efficiency, and trust. The culprit isn’t ServiceNow, but rather the disconnected workflows that plague even the most sophisticated ITSM deployments.

The Current State of Incident Management

Large organizations have established SLAs between IT/data support teams and their stakeholders (data consumers). Metrics like Mean Time to Acknowledge (MTTA), Mean Time to Detect (MTTD), and Mean Time to Resolve (MTTR) are standard benchmarks with defined SLAs tracked within ServiceNow, the market-leading ITSM platform.

A typical scenario looks like this: an enterprise data support team might have an SLA of 4 hours for MTTA. That means within 4 hours, they must acknowledge an issue and track it in ServiceNow to align with ITIL practices. Then, they might have an 8-hour SLA for MTTD, requiring them to identify the root cause within that time frame. On paper, this looks like operational discipline. Yet, for all the apparent rigor, most enterprises are unwittingly undermining their own SLAs through disconnected tooling and a lack of data observability.
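To make the arithmetic concrete, here is a minimal sketch of how an MTTA figure might be checked against the 4-hour SLA. It assumes each ticket carries a `reported_at` and an `acknowledged_at` timestamp; those field names and the sample values are illustrative, not an actual ServiceNow export.

```python
from datetime import datetime, timedelta
from statistics import mean

# Hypothetical ticket records; field names and timestamps are made up for illustration.
tickets = [
    {"reported_at": datetime(2025, 1, 6, 9, 0), "acknowledged_at": datetime(2025, 1, 6, 10, 30)},
    {"reported_at": datetime(2025, 1, 6, 9, 20), "acknowledged_at": datetime(2025, 1, 6, 12, 20)},
    {"reported_at": datetime(2025, 1, 6, 11, 0), "acknowledged_at": datetime(2025, 1, 6, 12, 30)},
]

MTTA_SLA = timedelta(hours=4)  # the 4-hour target from the scenario above


def mean_time_to_acknowledge(records):
    """Average of (acknowledged - reported) across all tickets."""
    seconds = mean(
        (r["acknowledged_at"] - r["reported_at"]).total_seconds() for r in records
    )
    return timedelta(seconds=seconds)


mtta = mean_time_to_acknowledge(tickets)
print(f"MTTA: {mtta}, within SLA: {mtta <= MTTA_SLA}")
```

Note that the clock here starts when a user reports the issue. Nothing in the calculation knows when the underlying failure actually happened.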

The Illusion of SLA Compliance: Why Metrics Can Lie

With the industry’s widespread reliance on MTTA, MTTD, and MTTR, we risk turning ServiceNow into a box-ticking exercise. When incidents are logged only after business users stumble across bad data (often hours after the root cause), every metric reported is a mirage. The real damage? SLAs are met on paper, but the business is making decisions based on inaccurate data.

Reality is messy. Imagine a data integration job in Informatica fails at 2:00 AM. It causes missing data to cascade downstream into Snowflake, ultimately corrupting 15 Tableau reports. When business users arrive at 9:00 AM, they’re all staring at flawed dashboards. Each user, unaware of the others, contacts the support team, triggering a flurry of ServiceNow tickets. The team thinks they’re doing everything right by creating these tickets within the SLA window for MTTA.

ServiceNow shows 15 separate incidents, all timestamped between 9:00 AM and 1:00 PM (not at 2:00 AM, when the real failure occurred). Each ticket is triaged, routed, and investigated as if it were a unique problem. The result is a wild goose chase of duplicated effort and wasted time.

SLI Inaccuracy and Duplication

The above scenario exposes two fundamental flaws in the status quo. They are:

- SLI Inaccuracy: ServiceNow’s incident timestamps reflect when users report issues, not when they actually began. The true impact window – the seven hours between failure and detection – is invisible in the metrics. The SLIs are wrong, and SLA compliance is a facade.

- Ticket Duplication: Instead of one consolidated incident with 15 downstream impact points, there are 15 tickets for a single root cause. This inflates the incident count and distorts reporting. The data team is stuck managing 15 tickets instead of fixing the single failure behind them.
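Both flaws can be seen in a few lines of code. The sketch below replays the scenario: one failure at 2:00 AM and 15 user tickets opened between 9:00 AM and 1:00 PM. The date, the even spacing of the tickets, and the field names are illustrative assumptions. It also assumes every ticket has already been linked to its root cause, which in practice is the hard part.

```python
from collections import defaultdict
from datetime import datetime, timedelta

# Times come from the scenario; the date, ticket spacing, and field names are illustrative.
failure_at = datetime(2025, 1, 6, 2, 0)    # the integration job fails at 2:00 AM
first_report = datetime(2025, 1, 6, 9, 0)  # the first user ticket arrives at 9:00 AM

# 15 tickets spread evenly from 9:00 AM to 1:00 PM, one per affected report.
tickets = [
    {
        "report": f"tableau_report_{i + 1}",
        "opened_at": first_report + timedelta(hours=4) * i / 14,
        "root_cause": "informatica_job_failure",
    }
    for i in range(15)
]

# SLI inaccuracy: the metric's clock starts at the earliest ticket, not at the failure.
reported_start = min(t["opened_at"] for t in tickets)
hidden_window = reported_start - failure_at

# Ticket duplication: group the tickets by root cause to see how many incidents exist.
by_cause = defaultdict(list)
for t in tickets:
    by_cause[t["root_cause"]].append(t["report"])

print(f"Tickets logged: {len(tickets)}")
print(f"Distinct root causes: {len(by_cause)}")
print(f"Impact window missing from the SLI: {hidden_window}")
```

Fifteen tickets collapse to one root cause, and the seven hours before the first report never enter any metric computed from ticket timestamps alone.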

Worse, the entire process is reactive. Fixes are reserved for after business users encounter bad data. Manual ticket creation and constant context-switching between ServiceNow and troubleshooting tools add further drag.

To be clear: this isn’t ServiceNow’s fault. The platform is a powerful ITSM solution, but it was never designed to natively monitor the intricate, distributed data pipelines that fuel modern analytics. The real culprit is the fragmented nature of today’s data environments, where monitoring and incident management are disconnected by design.

Deep Observability is the Solution

Pantomath is a platform purpose-built for end-to-end data observability, automation, and incident management. Through a direct, bidirectional integration with ServiceNow, Pantomath bridges the gap between detection and resolution, transforming how data incidents are managed.

Imagine the same scenario. A critical data pipeline fails at 2:00 AM, but this time Pantomath’s observability framework is at the helm, with incident management automated through its ServiceNow integration. Pantomath detects the failure the moment it occurs, not hours later when business users stumble on bad reports. Rather than waiting for a flood of support emails and duplicated tickets, Pantomath automatically creates a single, precisely timestamped incident in ServiceNow. This ticket isn’t just a placeholder; it comes fully loaded with a detailed root cause analysis, a map of all downstream impacts, and all the context engineers need to take action.
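For a sense of what a single consolidated incident could look like on the wire, here is a rough sketch that builds (but does not send) one request to ServiceNow's Table API for the `incident` table. It is not Pantomath's implementation, which this article does not describe. The helper name `build_incident_request`, the instance name `example`, the job name, and the assignment group are all hypothetical, and authentication is left out.

```python
import json
from datetime import datetime, timezone
from urllib.request import Request


def build_incident_request(instance, failed_job, failure_at, impacted_assets, assignment_group):
    """Build (without sending) one consolidated incident for a single root cause."""
    body = {
        "short_description": f"Pipeline failure: {failed_job}",
        # The failure time and downstream impacts travel with the ticket as free text.
        "description": (
            f"Failure detected at {failure_at.isoformat()}\n"
            f"Downstream impact ({len(impacted_assets)} assets):\n"
            + "\n".join(f"- {asset}" for asset in impacted_assets)
        ),
        "assignment_group": assignment_group,
    }
    return Request(
        url=f"https://{instance}.service-now.com/api/now/table/incident",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "Accept": "application/json"},
        method="POST",
    )


# Hypothetical values mirroring the scenario: one failed job, 15 affected reports.
req = build_incident_request(
    instance="example",
    failed_job="informatica_nightly_load",
    failure_at=datetime(2025, 1, 6, 2, 0, tzinfo=timezone.utc),
    impacted_assets=[f"tableau_report_{i}" for i in range(1, 16)],
    assignment_group="Data Platform Support",
)
payload = json.loads(req.data)
print(req.get_method(), req.full_url)
print(payload["short_description"])
```

The point of the sketch is the shape: one request, one root cause, and every downstream impact attached to it. How the failure time maps onto the incident's own timestamp fields depends on how a given ServiceNow instance is configured and is not covered here.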

Then, instead of forcing teams to manually triage and route each incident, Pantomath intelligently assigns the incident to the right team or owner. The entire incident lifecycle, from detection to resolution, can be managed within Pantomath’s platform, while ServiceNow remains perfectly in sync. There’s no more toggling between systems and no more confusion about where the real work is happening. For data reliability engineers, this means they can stay focused on solving the problem, not fighting the process.

What once was a chaotic scramble of 15 separate tickets, each representing a fragment of the truth, is now a single, unified incident that captures the full scope of the issue.

The Benefits: Accurate, Efficient, and Proactive Data Operations

This approach has several benefits:

- True SLA Compliance: Incidents are tracked from the moment they occur, not the moment they’re noticed. SLIs and SLAs finally align with reality.

- Duplication is Eliminated: One incident, many impacts. No more ticket bloat or wasted effort.

- Productivity Gains: Engineers spend their time resolving issues, not managing tickets.

- Proactive Detection: Issues are caught before business users are affected, protecting decision quality and business outcomes.

Perhaps most importantly, this proactive, automated approach fundamentally changes the culture of data operations. Data teams move from a reactive stance (scrambling to fix problems after they’ve already hurt the business) to a preventative mindset.

Automated Data Operations for the Win

The future of data operations isn’t manual, reactive, or fragmented. It’s automated, intelligent, and deeply integrated. With Pantomath and platforms like it, organizations can break free from the inefficiency cycle that has plagued data support teams for years.

In this day and age, “automation” can feel like a buzzword. But it’s not; data pipeline automation has real implications. It frees teams to focus on what truly matters: delivering reliable, trustworthy data to the business. There’s no place for misleading SLA metrics that mask the real health of the data landscape.

For years, organizations have prided themselves on tracking SLIs against SLAs, believing that their ServiceNow dashboards offer a true reflection of operational health. But most enterprises are measuring the wrong things at the wrong times. SLIs that miss the true window of impact aren’t actually working.

Pantomath’s deep integration with ServiceNow changes this paradigm. For the first time, organizations can trust that their SLA metrics are truly reflective of operational reality. This is the new standard: automated, integrated, and intelligent. That’s how you win with data.

Keep Reading

January 21, 2026

2026 Predictions for Data Leaders: Where Accountability Moves Next2026 predictions for data leaders on where accountability shifts next, from AI data pipelines and data quality risk to stack consolidation and audited data products.

Read More.png)

December 9, 2025

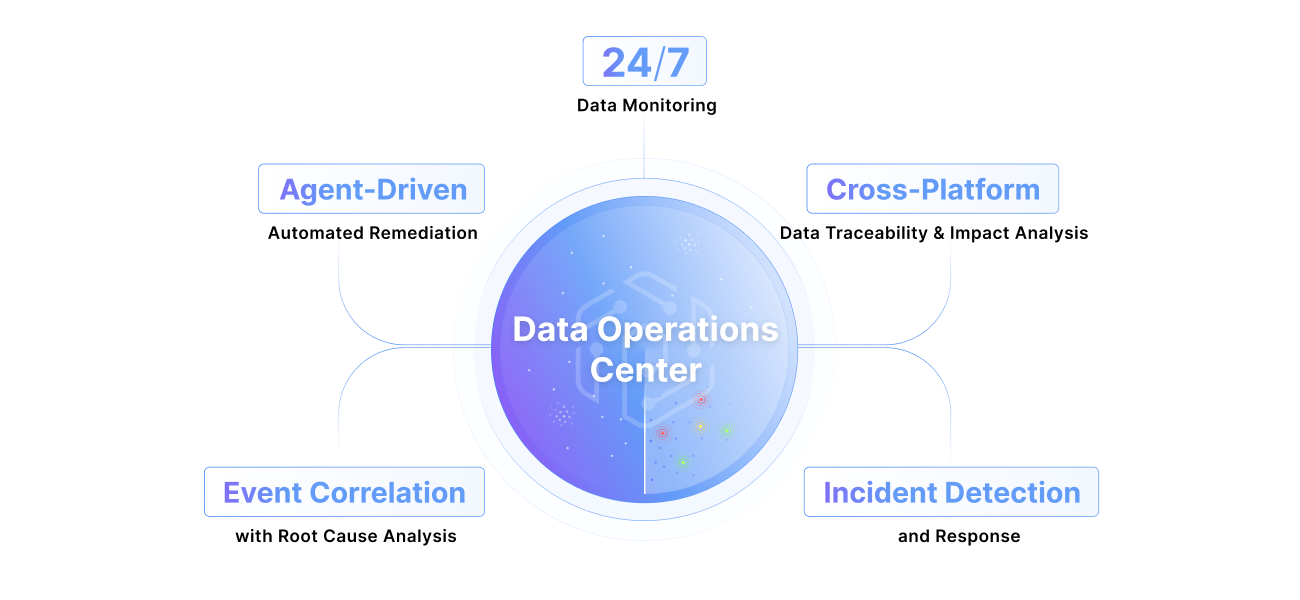

From NOC and SOC to DOC: The New Standard for Enterprise Data OperationsThe DOC isn’t a “nice-to-have,” it’s the next frontier—and it’s here. Read about the evolution from NOC to SOC to DOC, three functions enterprises cannot go without.

Read More.png)

October 28, 2025

Pantomath Achieves ISO/IEC 27001:2022 CertificationPantomath announced today that it has achieved ISO/IEC 27001:2022 certification for its Information Security Management System (ISMS).

Read More