Data in Motion Series, Part 3: The Silent Threat of Failed Job Initiations

How a single unstarted job can cascade into system-wide data failures - and how to prevent it

This article is the third of three in our “Data in Motion” series.

The first two parts of this series explored the fundamentals of dynamic data flows and real-time pipeline traceability. This post explores a critical vulnerability lurking in modern data architectures that often escapes detection: failed job initiations.

While much attention focuses on data quality issues and pipeline failures, the simple failure of a job to start can trigger catastrophic downstream effects. These silent failures are particularly dangerous because they don't generate the typical error signals that alert teams to problems. Instead, they create gaps in data motion that may go undetected until business operations are impacted.

In this final piece of our series, we'll examine the technical mechanics of job initiation failures, explore their cascading effects on data in motion, and detail how modern machine learning approaches can transform job monitoring from reactive to predictive. For data engineers and architects, understanding these failure modes is crucial for maintaining reliable data flows in increasingly complex pipeline architectures.

The Technical Anatomy of Job Initiation Failures

Modern data pipelines are far more complex than simple A-to-B data movements. They're intricate networks of interdependent jobs. When a job fails to start, it's often due to subtle interactions between these dependencies rather than obvious system failures.

Pipeline dependencies create an invisible choreography of job scheduling. Consider a typical enterprise data pipeline:

- Source systems generate raw data

- ETL jobs move and transform this data

- Aggregation jobs combine multiple data streams

- Loading jobs populate business intelligence tools

- Reporting jobs generate insights

Each stage depends on the successful completion of upstream tasks. But here's the challenge: these dependencies aren't always explicit. A job might depend on data freshness, resource availability, or even the state of external systems. When any of these implicit dependencies fail, the job may silently fail to initiate.
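One way to make implicit dependencies explicit is to evaluate them as named preconditions before a job may start, and to record the outcome either way. A blocked job then leaves a trace instead of nothing. The sketch below assumes each dependency can be written as a zero-argument check. `Precondition`, `evaluate_start_gate`, and the job and check names are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Callable, List

@dataclass
class Precondition:
    # Hypothetical: a named implicit dependency plus a check that returns
    # True when the dependency currently holds.
    name: str
    check: Callable[[], bool]

def evaluate_start_gate(job: str, preconditions: List[Precondition]) -> dict:
    """Evaluate every precondition and return an explicit decision record.

    The record is produced whether or not the job may start. A blocked job
    therefore leaves evidence of why it did not run.
    """
    blocked_by = [p.name for p in preconditions if not p.check()]
    return {
        "job": job,
        "evaluated_at": datetime.now(timezone.utc).isoformat(),
        "can_start": not blocked_by,
        "blocked_by": blocked_by,
    }

# Example: an aggregation job that implicitly needs upstream data under 2 hours old.
last_upstream_update = datetime.now(timezone.utc) - timedelta(hours=3)
decision = evaluate_start_gate(
    "aggregate_sales",
    [
        Precondition(
            "upstream_fresh_within_2h",
            lambda: datetime.now(timezone.utc) - last_upstream_update < timedelta(hours=2),
        ),
        Precondition("warehouse_reachable", lambda: True),  # stand-in check
    ],
)
print(decision["can_start"], decision["blocked_by"])
```

Shipping each decision record to wherever job telemetry is stored turns "did not start" into an event that monitoring can actually see.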

Job initiation failures take many forms. Here are some of the most common patterns to watch out for.

Resource Allocation Failures

Resource allocation failures occur when necessary computational resources aren't available at job start time. These can be particularly insidious because they often don't register as system failures. The most frequent scenarios involve insufficient memory allocation due to competing workloads, CPU throttling during high-demand periods, storage constraints preventing temporary file creation, and network bandwidth limitations affecting data transfer initialization.
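A preflight check can catch some of these conditions before the scheduler tries to launch the job. The minimal sketch below covers only the storage case, using the standard library's `shutil.disk_usage` on the temp directory. `ResourceRequirement` and the 2 GiB figure are hypothetical. Memory could be checked the same way with a third-party package such as `psutil` (`psutil.virtual_memory().available`).

```python
import shutil
import tempfile
from dataclasses import dataclass
from typing import Optional, Tuple

@dataclass
class ResourceRequirement:
    # Hypothetical per-job requirement; real values might come from the
    # job's historical peak usage plus a safety margin.
    min_free_disk_bytes: int

def preflight_disk_check(req: ResourceRequirement, path: Optional[str] = None) -> Tuple[bool, str]:
    """Check free space where the job writes temporary files, before it starts."""
    target = path or tempfile.gettempdir()
    free = shutil.disk_usage(target).free
    if free < req.min_free_disk_bytes:
        return False, f"only {free} bytes free in {target}; job needs {req.min_free_disk_bytes}"
    return True, f"{free} bytes free in {target}"

ok, detail = preflight_disk_check(ResourceRequirement(min_free_disk_bytes=2 * 1024**3))
print(ok, detail)
```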

Configuration Drift

Configuration drift represents a gradual divergence between expected and actual system states. It's particularly dangerous because it can develop slowly over time. Environment variables might change between deployments, API endpoints may update without pipeline adjustments, database connection parameters can become outdated, and cloud service configurations might evolve without pipeline updates.
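Drift becomes detectable once a known-good baseline exists to compare against. The sketch below diffs a recorded baseline of settings against the live values and reports missing, changed, and unexpected keys. The setting names and URLs are hypothetical. `snapshot_env` reads environment variables, but the same diff applies to connection parameters or cloud configuration exported as key/value pairs.

```python
import os
from typing import Dict, Iterable, List, Mapping

def snapshot_env(keys: Iterable[str]) -> Dict[str, str]:
    """Capture the current values of the settings a pipeline depends on."""
    return {k: os.environ[k] for k in keys if k in os.environ}

def detect_config_drift(expected: Mapping[str, str], actual: Mapping[str, str]) -> Dict[str, List[str]]:
    """Compare a recorded baseline against the live configuration."""
    return {
        "missing": sorted(k for k in expected if k not in actual),
        "changed": sorted(k for k in expected if k in actual and actual[k] != expected[k]),
        "unexpected": sorted(k for k in actual if k not in expected),
    }

# Baseline recorded at the last known-good deployment (hypothetical values).
baseline = {"WAREHOUSE_HOST": "db.internal", "API_BASE_URL": "https://api.example.com/v1"}
# A real check would use snapshot_env(baseline); a literal keeps the example deterministic.
live = {"WAREHOUSE_HOST": "db.internal", "API_BASE_URL": "https://api.example.com/v2"}
print(detect_config_drift(baseline, live))
```

Running this diff before each scheduled start, not just at deploy time, catches drift that accumulates between deployments.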

Permission and Authentication Issues

As data moves through different systems, authentication failures can prevent jobs from starting. These often manifest as expired service account credentials, changed access policies, token rotation failures, or cross-system permission mismatches.
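A credential that is valid right now can still expire before, or during, the job's next run. Checking expiry against the schedule rather than the current time surfaces this early. The sketch assumes the credential's expiry time and the job's expected runtime are known. `credential_status` and its status strings are hypothetical.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

def credential_status(
    expires_at: datetime,
    next_run_at: datetime,
    expected_runtime: timedelta,
    now: Optional[datetime] = None,
) -> str:
    """Classify a credential relative to the job's next scheduled run."""
    now = now or datetime.now(timezone.utc)
    if expires_at <= now:
        return "expired"
    # The credential must outlive the whole run, not just its start.
    if expires_at <= next_run_at + expected_runtime:
        return "expires_before_run_completes"
    return "ok"

now = datetime(2025, 1, 1, tzinfo=timezone.utc)
next_run = now + timedelta(hours=6)
for expires in (now - timedelta(minutes=1), now + timedelta(hours=6, minutes=30), now + timedelta(days=2)):
    print(credential_status(expires, next_run, timedelta(hours=1), now=now))
```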

Upstream Data Availability Issues

Some of the sneakiest job initiation failures involve upstream data availability problems. Source systems might fail to generate expected data, partial data delivery could cause validation failures, time zone misalignments can shift when data is actually ready, and changed data formats might break intake processes. These insidious errors are difficult to catch until after the fact.
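Two of these cases, time zone misalignment and partial delivery, can be checked explicitly before a job starts. The sketch below computes the latest complete day in the source system's local time rather than the pipeline's. It then checks that the partition has landed with a plausible row count. The partition layout, the row counts, and the fixed UTC-5 offset are assumptions for illustration. `zoneinfo.ZoneInfo("America/New_York")` would also handle daylight saving time.

```python
from datetime import date, datetime, timedelta, timezone, tzinfo
from typing import Dict, Tuple

def expected_partition(now_utc: datetime, source_tz: tzinfo) -> date:
    """Most recent complete day as seen by the source system, not by the pipeline."""
    local_now = now_utc.astimezone(source_tz)
    return local_now.date() - timedelta(days=1)

def upstream_ready(
    landed_rows: Dict[date, int], now_utc: datetime, source_tz: tzinfo, min_rows: int
) -> Tuple[bool, str]:
    part = expected_partition(now_utc, source_tz)
    rows = landed_rows.get(part)
    if rows is None:
        return False, f"partition {part} has not landed"
    if rows < min_rows:
        return False, f"partition {part} looks partial ({rows} < {min_rows} rows)"
    return True, f"partition {part} ready ({rows} rows)"

# Fixed UTC-5 offset (US Eastern in winter) for illustration.
source_tz = timezone(timedelta(hours=-5))
now_utc = datetime(2025, 1, 15, 3, 0, tzinfo=timezone.utc)
# At 03:00 UTC on January 15 it is still January 14 at the source, so the latest
# complete source day is January 13, not January 14 as a naive UTC calculation suggests.
print(expected_partition(now_utc, source_tz))  # 2025-01-13
print(upstream_ready({date(2025, 1, 13): 500}, now_utc, source_tz, min_rows=100_000))
```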

Why Traditional Monitoring Falls Short

Traditional data observability tools excel at detecting failures in running processes but struggle with jobs that never begin. This blind spot exists for several reasons.

First, when a job doesn't start, there's often no error message to capture. It's the absence of an event rather than the presence of a failure. Second, traditional tools often rely on the timestamp of the last successful run, which can't distinguish between a job that's still waiting to start and one that failed to start.
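Detecting an absence requires knowing what should have happened. A minimal sketch, assuming the expected start times can be derived from the schedule and actual start events are recorded: each scheduled slot is matched to a start event within a grace window. Each slot is labeled started, pending (still inside the window, so possibly just waiting), or missed. The function name and the 15-minute grace period are hypothetical.

```python
from datetime import datetime, timedelta, timezone
from typing import Dict, List

def classify_expected_runs(
    expected_starts: List[datetime],
    actual_starts: List[datetime],
    now: datetime,
    grace: timedelta = timedelta(minutes=15),
) -> Dict[datetime, str]:
    """Label each scheduled start as 'started', 'pending', or 'missed'."""
    unmatched = sorted(actual_starts)
    status: Dict[datetime, str] = {}
    for expected in sorted(expected_starts):
        match = next((a for a in unmatched if expected <= a <= expected + grace), None)
        if match is not None:
            unmatched.remove(match)  # each start event satisfies at most one slot
            status[expected] = "started"
        elif now <= expected + grace:
            status[expected] = "pending"  # window still open: job may just be waiting
        else:
            status[expected] = "missed"  # window closed with no start event at all
    return status

base = datetime(2025, 1, 1, tzinfo=timezone.utc)
schedule = [base + timedelta(hours=h) for h in range(4)]
starts = [base + timedelta(minutes=2), base + timedelta(hours=1, minutes=5)]
now = base + timedelta(hours=3, minutes=5)
for slot, state in classify_expected_runs(schedule, starts, now).items():
    print(slot.strftime("%H:%M"), state)
```

The 02:00 slot comes out as missed even though no error was ever logged. A last-success timestamp alone (01:05 here) would not reveal that.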

Third, modern pipelines span multiple platforms and tools. Traditional monitoring solutions, focused on individual systems, miss the broader context needed to understand job initiation failures. Lastly, the number of potential failure points grows exponentially with pipeline complexity. According to the 2024 State of Data Observability report, 90% of organizations struggle to quickly identify root causes due to this complexity.

Consider a real example from a Fortune 250 financial institution: their critical reporting pipeline appeared healthy according to traditional monitoring tools, with every status indicator green. Yet crucial jobs never started because of subtle permission changes in an upstream system. The problem surfaced only when end users noticed that reports contained stale data.

This highlights why modern data operations require a new approach to monitoring - one that understands the dynamic nature of data in motion and can detect the subtle signals that precede job initiation failures. In the next section, we'll explore how these failures cascade through data pipelines and impact business operations.

Cascade Effects of Failed Jobs

Consider TQL's experience with their hourly sales reporting pipeline. When a critical transformation job failed to start, it wasn't just the immediate report that was affected. The failure created a backlog of unprocessed data that grew with each passing hour. By the time the sales team noticed stale data in their dashboards, the pipeline had accumulated hours of pending transformations, each depending on the previous hour's processed data.

When a job fails to initiate, its impact ripples through the data ecosystem like a stone thrown into a pond. A single point of failure turns into system-wide disruption. Here are some specifics on how these effects propagate through modern data architectures.

Data Freshness Degradation

Data freshness degradation follows a compounding pattern. When a job fails to start, it doesn't just delay the current data - it creates a growing gap between real-world events and their representation in your data systems. At the aforementioned Fortune 250 financial institution, this manifested during the Thanksgiving shopping period when a failed job initiation meant transaction monitoring systems operated with increasingly stale data. Each passing minute widened the gap between actual customer activity and the bank's ability to detect potential fraud patterns.
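The compounding is easy to quantify for an hourly pipeline where each batch depends on the previous one, as in the TQL example. The sketch counts the hourly batches pending since the last processed one. It then estimates catch-up time with a simple fluid approximation: new batches keep arriving every hour while the backlog drains, so T = backlog × runtime / (1 − runtime / 1 h). The model, the function names, and the 20-minute runtime are assumptions, not measurements from either organization.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

HOUR = timedelta(hours=1)

def pending_batches(last_processed_until: datetime, now: datetime) -> int:
    """Complete hourly batches that have accumulated since the last processed one."""
    return (now - last_processed_until) // HOUR

def catch_up_time(backlog: int, batch_runtime: timedelta) -> Optional[timedelta]:
    """Approximate time to clear a backlog of sequential hourly batches.

    While the backlog drains, one new batch still arrives per hour, so
    T = backlog * runtime + (T / 1h) * runtime, i.e.
    T = backlog * runtime / (1 - runtime / 1h). Returns None when a batch
    takes an hour or more, because the backlog then never shrinks.
    """
    utilization = batch_runtime / HOUR
    if utilization >= 1:
        return None
    return backlog * batch_runtime / (1 - utilization)

last = datetime(2025, 1, 1, 9, 0, tzinfo=timezone.utc)
now = datetime(2025, 1, 1, 14, 20, tzinfo=timezone.utc)
backlog = pending_batches(last, now)  # 5
print(backlog, catch_up_time(backlog, timedelta(minutes=20)))
```

Five hours of missed starts cost roughly two and a half more hours of catch-up before the data is fresh again, even if the job starts immediately.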

Pipeline Scheduling Chaos

Modern data pipelines operate like intricate train schedules. When one job fails to start, it's similar to a train failing to depart - every subsequent connection is affected. The scheduling system typically can't distinguish between a delayed job and one that failed to start, leading to resource reservation for jobs that will never run. This creates artificial bottlenecks as the scheduler holds resources for phantom jobs while actual work waits in queue.
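One mitigation for phantom reservations is a start timeout: capacity reserved for a job that has not started within a set window is released back to the queue. The release should also raise an alert. The sketch below is a hypothetical model, not any particular scheduler's API. `Reservation`, the slot counts, and the 30-minute timeout are assumptions.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import List, Optional, Tuple

@dataclass
class Reservation:
    job: str
    slots: int
    reserved_at: datetime
    started_at: Optional[datetime] = None

def reclaim_phantom_reservations(
    reservations: List[Reservation], now: datetime, start_timeout: timedelta
) -> Tuple[List[Reservation], List[Reservation]]:
    """Return (kept, released). A reservation is released when its job has
    not started within start_timeout, freeing the capacity for queued work."""
    kept, released = [], []
    for r in reservations:
        if r.started_at is None and now - r.reserved_at > start_timeout:
            released.append(r)
        else:
            kept.append(r)
    return kept, released

now = datetime(2025, 1, 1, 12, 0, tzinfo=timezone.utc)
reservations = [
    Reservation("ingest_orders", 4, now - timedelta(minutes=50), started_at=now - timedelta(minutes=45)),
    Reservation("transform_orders", 8, now - timedelta(minutes=5)),     # may still start
    Reservation("refresh_dashboards", 6, now - timedelta(minutes=40)),  # phantom
]
kept, released = reclaim_phantom_reservations(reservations, now, timedelta(minutes=30))
print([r.job for r in released], sum(r.slots for r in released), "slots freed")
```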

Resource Utilization Imbalance

Failed job initiations create paradoxical resource utilization patterns. While some systems sit idle waiting for data that will never arrive, others become overloaded trying to process backlogged work. This imbalance often manifests in unexpected ways. For instance, when Lendly's data refresh jobs failed to initiate, their systems showed deceptively low resource utilization while downstream processes accumulated increasing memory usage trying to handle partial or stale datasets.

Data Consistency Challenges

In distributed systems, data consistency depends on careful orchestration of updates across multiple services. When jobs fail to start, different parts of the system can become desynchronized. This isn't just about data being out of date - it's about different systems having conflicting views of reality.

Partial Updates

Perhaps the most insidious effect of failed jobs occurs when some jobs in a sequence start while others fail. This creates partial updates that can be harder to detect and fix than complete failures. Data consumers might receive some updated fields while others remain stale, leading to subtle inconsistencies that can persist for hours or days before discovery.
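Partial updates can be surfaced by comparing freshness watermarks across datasets that are supposed to be refreshed together. Any member that lags the group's newest member by more than a tolerance is suspect. The group membership, dataset names, and 30-minute tolerance below are hypothetical.

```python
from datetime import datetime, timedelta, timezone
from typing import Dict, List

def find_desynchronized(watermarks: Dict[str, datetime], tolerance: timedelta) -> List[str]:
    """Return members of an update group lagging its newest member by more than tolerance."""
    newest = max(watermarks.values())
    return sorted(name for name, ts in watermarks.items() if newest - ts > tolerance)

t = datetime(2025, 1, 1, 10, 0, tzinfo=timezone.utc)
group = {
    "orders": t,
    "order_items": t - timedelta(minutes=10),
    "customers": t - timedelta(hours=3),  # its refresh job never started
}
print(find_desynchronized(group, tolerance=timedelta(minutes=30)))  # ['customers']
```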

Building Predictive Data in Motion Monitoring

Forward-thinking organizations and data teams want to build a predictive monitoring system that catches job initiation issues before they cascade through the pipeline. Here’s how they can do it.

Technical Architecture

The foundation of predictive job monitoring rests on a comprehensive observability layer that spans your entire data pipeline. It should bridge multiple systems, from ETL tools to data warehouses to BI platforms. Rather than monitoring these systems in isolation, the architecture should create a unified view of data motion across the enterprise.

A robust predictive monitoring system typically consists of four key layers:

- First, a data collection layer that gathers metrics about job performance, timing, and dependencies.

- Second, an analysis layer that processes this telemetry data to identify patterns and anomalies.

- Third, a prediction layer that uses these patterns to forecast potential failures.

- Fourth, an action layer that can trigger automated responses or alert relevant teams.
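The four layers can be sketched as plain functions chained together. This is a toy illustration under stated assumptions. The only signal is each job's start delay, and a simple z-score against a per-job baseline stands in for the prediction layer, which a production system might implement with a trained model instead. All names and field keys are hypothetical.

```python
import math
import statistics
from dataclasses import dataclass
from typing import Dict, Iterable, List, Tuple

@dataclass
class Telemetry:
    job: str
    start_delay_s: float  # seconds between scheduled and actual start

def collect(raw_events: Iterable[dict]) -> List[Telemetry]:
    """Layer 1: normalize raw scheduler events into telemetry records."""
    return [Telemetry(e["job"], float(e["start_delay_s"])) for e in raw_events]

def analyze(history: List[Telemetry]) -> Dict[str, Tuple[float, float]]:
    """Layer 2: per-job baseline (mean, population stdev) of start delay."""
    by_job: Dict[str, List[float]] = {}
    for rec in history:
        by_job.setdefault(rec.job, []).append(rec.start_delay_s)
    return {job: (statistics.mean(v), statistics.pstdev(v)) for job, v in by_job.items() if len(v) >= 2}

def predict(baselines: Dict[str, Tuple[float, float]], latest: Dict[str, float],
            z_threshold: float = 3.0) -> Dict[str, float]:
    """Layer 3: flag jobs whose latest start delay is far outside their baseline."""
    at_risk: Dict[str, float] = {}
    for job, delay in latest.items():
        if job not in baselines:
            continue
        mean, sd = baselines[job]
        z = (delay - mean) / sd if sd > 0 else (math.inf if delay > mean else 0.0)
        if z >= z_threshold:
            at_risk[job] = z
    return at_risk

def act(at_risk: Dict[str, float]) -> List[str]:
    """Layer 4: turn predictions into alerts (a real system might also remediate)."""
    return [f"ALERT {job}: start delay {z:.1f} sd above normal" for job, z in sorted(at_risk.items())]

history = collect(
    [{"job": "load_bi", "start_delay_s": d} for d in (10, 12, 11, 9, 13)]
    + [{"job": "etl_orders", "start_delay_s": d} for d in (5, 5, 6, 4)]
)
alerts = act(predict(analyze(history), {"load_bi": 30.0, "etl_orders": 5.0}))
print(alerts)
```

Keeping the layers as separate functions mirrors the architecture above: any one layer, such as the prediction step, can be swapped without touching the others.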

Configuring Alert Thresholds

Alert threshold configuration represents a balance between early warning and alert fatigue. Rather than setting static thresholds, modern systems should employ dynamic thresholds that adjust based on historical patterns and business context.

Consider TQL's experience: their sales reporting pipeline required different thresholds during peak business hours versus overnight processing. The system learned normal variation patterns for different time periods and adjusted sensitivity accordingly. This dynamic approach significantly reduced false positives while ensuring critical issues weren't missed.
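A simple version of time-aware thresholds learns a separate limit for each operating period from history: mean plus k sample standard deviations. The same observation can then be judged differently at peak hours and overnight. The metric (start latency in seconds), the 08:00 to 18:00 peak window, k = 3, and the sample values are assumptions for illustration, not TQL's actual configuration.

```python
import statistics
from collections import defaultdict
from datetime import datetime
from typing import Dict, Iterable, List, Tuple

def period(ts: datetime) -> str:
    # Hypothetical split between business-hours and overnight processing.
    return "peak" if 8 <= ts.hour < 18 else "overnight"

def learn_thresholds(history: Iterable[Tuple[datetime, float]], k: float = 3.0,
                     min_samples: int = 5) -> Dict[str, float]:
    """Per-period threshold = mean + k * sample stdev of past observations."""
    samples: Dict[str, List[float]] = defaultdict(list)
    for ts, value in history:
        samples[period(ts)].append(value)
    return {p: statistics.mean(v) + k * statistics.stdev(v)
            for p, v in samples.items() if len(v) >= min_samples}

def is_anomalous(ts: datetime, value: float, thresholds: Dict[str, float]) -> bool:
    limit = thresholds.get(period(ts))
    return limit is not None and value > limit

day = datetime(2025, 1, 6)
history = (
    [(day.replace(hour=10), v) for v in (60, 70, 65, 75, 80)]
    + [(day.replace(hour=2), v) for v in (300, 320, 310, 290, 305)]
)
thresholds = learn_thresholds(history)
# The same 200-second start latency is abnormal at 10:00 but routine at 02:00.
print(is_anomalous(day.replace(hour=10), 200, thresholds))  # True
print(is_anomalous(day.replace(hour=2), 200, thresholds))   # False
```

Re-running `learn_thresholds` over a rolling window of recent history keeps the limits adapting as normal behavior shifts.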

Integration with Existing Tools

Most organizations already have significant investments in monitoring and observability tools. Rather than replacing these systems, predictive job monitoring should enhance them through strategic integrations.

The key is to establish a unified view of job health across all systems. This means aggregating data from various sources - job schedulers, execution logs, resource metrics, and business KPIs - into a coherent picture of pipeline health. Integration should preserve existing workflows while adding predictive capabilities on top.
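The aggregation step can be as simple as folding records from each source into one health record per job. Some signals, such as a scheduled run with no matching start, appear only once scheduler and execution data sit side by side. The record shapes below (`job`, `event`, `mem_pct`) are hypothetical. Real inputs would come from whatever scheduler, log store, and metrics system are already in place.

```python
from collections import defaultdict
from typing import Dict, Iterable

def unify(scheduler_events: Iterable[dict], execution_logs: Iterable[dict],
          resource_metrics: Iterable[dict]) -> Dict[str, dict]:
    """Merge per-source records into one health record per job."""
    health: Dict[str, dict] = defaultdict(
        lambda: {"scheduled": 0, "started": 0, "errors": 0, "peak_mem_pct": None}
    )
    for e in scheduler_events:
        health[e["job"]]["scheduled"] += 1
    for log in execution_logs:
        rec = health[log["job"]]
        if log["event"] == "start":
            rec["started"] += 1
        elif log["event"] == "error":
            rec["errors"] += 1
    for m in resource_metrics:
        rec = health[m["job"]]
        rec["peak_mem_pct"] = max(rec["peak_mem_pct"] or 0.0, m["mem_pct"])
    for rec in health.values():
        # Only computable when scheduler and execution data are combined.
        rec["missed_starts"] = max(0, rec["scheduled"] - rec["started"])
    return dict(health)

view = unify(
    scheduler_events=[{"job": "refresh"}, {"job": "refresh"}, {"job": "report"}],
    execution_logs=[{"job": "refresh", "event": "start"},
                    {"job": "report", "event": "start"},
                    {"job": "report", "event": "error"}],
    resource_metrics=[{"job": "report", "mem_pct": 72.5}, {"job": "report", "mem_pct": 88.0}],
)
print(view["refresh"]["missed_starts"], view["report"])
```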

Data Pipeline Automation

Effective pipeline automation serves as the backbone of proactive job monitoring. Rather than just tracking job status, automation creates a self-healing system that can respond to potential failures before they impact business operations. Pipeline automation should also handle routine maintenance tasks that often cause job initiation failures, like resource scaling and configuration updates.

Pipeline automation is doubly powerful when combined with observability. Systems can prevent many common failure modes by continuously monitoring health and automatically adjusting based on current conditions. This might mean automatically redistributing workloads when resource constraints are detected, or temporarily routing data through backup pipelines when primary systems show signs of stress.
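The two responses described here, waiting out transient start failures and rerouting away from a stressed system, can be combined into a single start wrapper. A minimal sketch, assuming the primary and backup pipelines are exposed as callables and a health probe is available. `JobStartError`, the retry count, and the backoff base are hypothetical. The injectable `sleep` parameter exists only so the behavior can be tested without real waiting.

```python
import time
from typing import Callable, List

class JobStartError(Exception):
    """Hypothetical error raised when a job cannot be submitted or started."""

def run_with_fallback(
    primary: Callable[[], str],
    backup: Callable[[], str],
    primary_healthy: Callable[[], bool],
    attempts: int = 3,
    base_delay_s: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Start on the primary pipeline with exponential backoff between attempts;
    route to the backup if the primary looks unhealthy or keeps failing to start."""
    if not primary_healthy():
        return backup()  # pre-emptive reroute: don't start on a stressed system
    for attempt in range(attempts):
        try:
            return primary()
        except JobStartError:
            if attempt < attempts - 1:
                sleep(base_delay_s * 2 ** attempt)  # 1s, 2s, 4s, ...
    return backup()

def flaky_primary() -> str:
    raise JobStartError("no executor slot available")

delays: List[float] = []
print(run_with_fallback(flaky_primary, lambda: "ran on backup", lambda: True, sleep=delays.append))
print(delays)  # [1.0, 2.0]
```

In practice every fallback should also emit an alert. Otherwise the automation quietly masks exactly the kind of initiation failure it was meant to surface.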

Continuous Learning

Predictive monitoring systems can improve over time through continuous learning. That means more than collecting data about job failures; it means understanding each failure's root causes and business impact. Each prevented failure becomes a learning opportunity: after preventing a major incident, organizations can incorporate the new patterns into their prediction models, making them more effective at catching similar issues down the line.

Data in Motion Can Still Be Reliable – with Monitoring

The evolution of data pipeline monitoring mirrors the evolution of data architectures themselves - from static, batch-oriented systems to dynamic, continuously flowing data streams. As we've explored throughout this series, data in motion requires a fundamentally different approach to reliability and observability.

The future of data pipeline reliability lies in combining predictive monitoring, automated pipeline discovery, and intelligent threshold management into a cohesive strategy. This isn't just about catching failures faster; it's about preventing them in the first place. Free your data teams to focus on innovation, not firefighting.

We've traced the journey of data in motion from fundamental concepts through real-time monitoring and finally to predictive failure prevention. The next frontier lies in expanding these capabilities with artificial intelligence and machine learning to create truly self-healing data pipelines. But that's a story for another series.

Interested in seeing what end-to-end pipeline monitoring can do for your organization? Demo Pantomath today.

Read Data in Motion Part 1 here

Read Data in Motion Part 2 here

Keep Reading

.png)

December 9, 2025

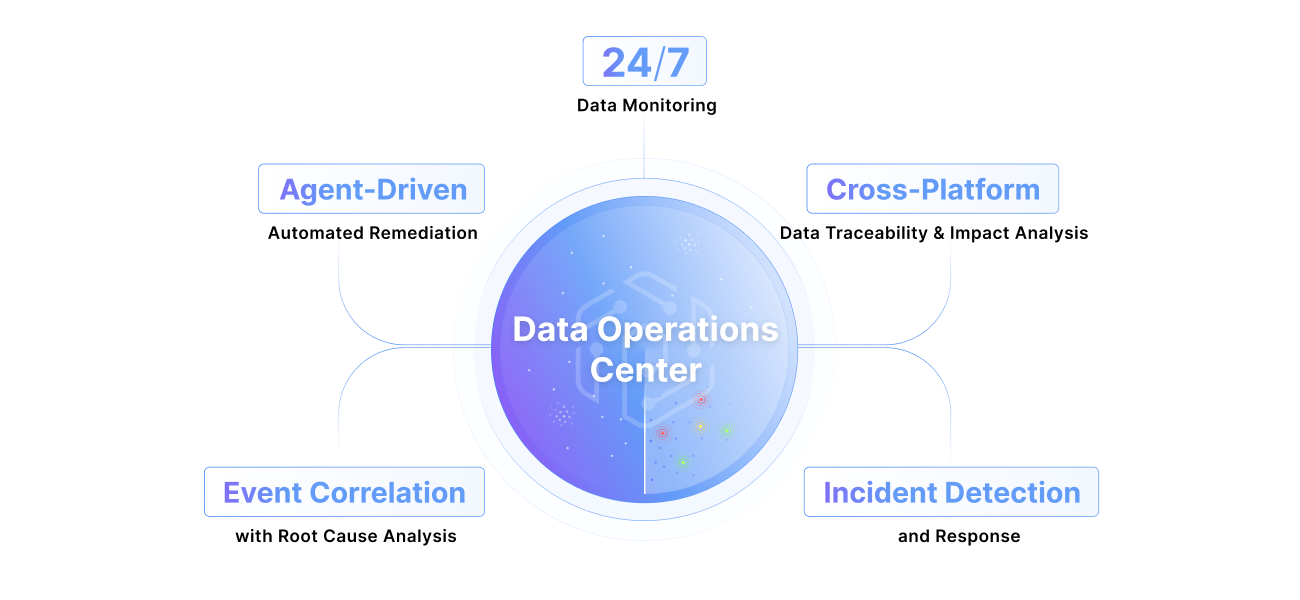

From NOC and SOC to DOC: The New Standard for Enterprise Data OperationsThe DOC isn’t a “nice-to-have,” it’s the next frontier—and it’s here. Read about the evolution from NOC to SOC to DOC, three functions enterprises cannot go without.

Read More.png)

October 28, 2025

Pantomath Achieves ISO/IEC 27001:2022 CertificationPantomath announced today that it has achieved ISO/IEC 27001:2022 certification for its Information Security Management System (ISMS).

Read More.png)

October 27, 2025

Rethinking Incident Response: How Agentic AI Transforms L1 to L3 WorkflowsDiscover how Agentic AI transforms L1–L3 incident response, automating root cause analysis and streamlining data operations across the enterprise.

Read More