
Data reliability issues start earlier than you think. Learn why observability at ingestion is the foundation of trust and how proactive monitoring and automation change the game.

Why Observability at Ingestion is Crucial for Data Trust & Reliability

After years of working with enterprise data teams across industries, from healthcare systems managing patient data to financial institutions processing billions in transactions, I've observed a consistent pattern. The most damaging data reliability issues don't start in your data warehouse or BI layer. They begin at ingestion.

There are two fundamental reasons why keeping data reliable throughout ingestion, from source to target, continues to plague organizations despite massive investments in the modern data stack. Let’s explore what they are and how to overcome them.

The Human Challenge: Organizational Silos

The biggest challenge in enterprise data isn’t technical; it’s human. Organizational silos and cultural disconnects between data producers (like ERP database teams) and data consumers (like analytics and platform teams) create breakdowns, long before any technology is involved.

I've seen this firsthand in our work with organizations like Franciscan Health, where data flows through a maze of systems, from EMRs and ERP applications to dashboards, data warehouses, and flat-file exchanges with external partners.

When no single team owned or even had visibility into the full end-to-end data pipeline, critical upstream changes would happen in isolation, blindsiding downstream teams when it was already too late.

Imagine an ERP team trying to optimize its system. The team removes a column it believes is unused. Unbeknownst to them, that column powers three downstream pipelines, including one feeding an executive dashboard. The first warning sign doesn’t come until leadership demands to know why the revenue dashboard just dropped to zero. By then, the damage is done, and the ERP team has to scramble to account for the oversight.

These issues aren’t rooted in faulty technology. They’re rooted in weak change management, weak incident management, and a lack of cross-team collaboration. It’s a people and process problem. Technology alone can’t fix data ingestion issues. But technology can help build the visibility, accountability, and trust needed to bridge the gap, especially because performing impact analysis manually across systems is extremely challenging.
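To make impact analysis concrete, here is a minimal sketch of the two checks the ERP scenario calls for. The first diffs the columns a pipeline expects against what the source currently exposes. The second walks a lineage graph to list everything fed by a changed column. The sketch assumes that both the expected schema and the lineage edges are already available as plain dictionaries. In practice, building and maintaining that lineage is the hard part. All table, column, and job names are hypothetical.

```python
from collections import deque


def breaking_changes(expected: dict[str, str], observed: dict[str, str]) -> dict[str, str]:
    """Compare the column -> type mapping a pipeline expects with what the source exposes now."""
    changes = {}
    for col, col_type in expected.items():
        if col not in observed:
            changes[col] = "removed"
        elif observed[col] != col_type:
            changes[col] = f"type changed: {col_type} -> {observed[col]}"
    # Newly added columns are not treated as breaking in this sketch.
    return changes


def downstream_impact(lineage: dict[str, list[str]], start: str) -> list[str]:
    """Breadth-first walk of a lineage graph: every asset fed, directly or indirectly, by `start`."""
    seen = {start}
    impacted = []
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for child in lineage.get(node, []):
            if child not in seen:
                seen.add(child)
                impacted.append(child)
                queue.append(child)
    return impacted


# Hypothetical example mirroring the ERP scenario: region_code was dropped at the source.
expected = {"order_id": "INTEGER", "amount": "NUMBER", "region_code": "VARCHAR"}
observed = {"order_id": "INTEGER", "amount": "NUMBER"}
lineage = {
    "erp.orders.region_code": ["ingest_orders", "ingest_returns", "ingest_forecast"],
    "ingest_orders": ["dw.revenue"],
    "ingest_returns": ["dw.revenue"],
    "dw.revenue": ["exec_revenue_dashboard"],
}

for col, change in breaking_changes(expected, observed).items():
    print(col, change, downstream_impact(lineage, f"erp.orders.{col}"))
# prints: region_code removed ['ingest_orders', 'ingest_returns', 'ingest_forecast', 'dw.revenue', 'exec_revenue_dashboard']
```

Running the schema diff before each load allows the pipeline to stop, or at least warn the owning teams, before the dashboard ever shows zero.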

The Technical Challenge: Two Worlds Colliding

The second challenge is purely technical: connecting two different worlds. The inherent difficulties include network issues and connection failures, source system volatility, diverse data formats and structures across fundamentally different database types and platform versions, high data volume and velocity, and missing or incomplete data at the source.

According to our research, when asked about the average time needed to resolve data pipeline issues, 90% of respondents said it takes hours, days, or even weeks, and 74% said they rely on downstream teams to detect problems. This reactive approach is unsustainable in today's data-driven business environment.

Where Traditional Tools Fall Short

Most data teams still depend on the built-in monitoring features of their ETL tools, but those tools were built for a simpler time, when a single ETL job extracted, transformed, and loaded data into a data warehouse where it was simply stored and visualized. Designed to flag only job failures, they offer limited visibility and basic troubleshooting, leaving teams blind to deeper issues.

Data integration engineers and operations teams are often stuck reacting to problems instead of preventing them. Critical observability gaps across ELT architectures allow small issues to snowball into major outages. Here are the most common gaps:

- Lack of downstream impact understanding: Data integration tools lack a holistic understanding of the downstream impact of bad data, and they offer users no guided path to resolution. Your Informatica job might complete "successfully," but if it loaded corrupted data, every downstream process becomes a ticking time bomb.

- Limited monitoring scope: Data integration tools lack robust monitoring and self-healing capabilities. Data management pitfalls such as data latency and jobs that never start fall outside their monitoring framework. These tools can tell you that a job failed, but they cannot tell you why or what to do about it, and they can't detect runtime issues.

- Manual resolution: When issues occur, teams spend days reverse-engineering data flows to understand impact and manually coordinate fixes across multiple platforms. This manual approach doesn't scale in complex, modern data environments.
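Two of the gaps above, jobs that never start and data that arrives late, can be expressed as simple checks that run alongside job-status monitoring. A basic volume check covers the "successful but wrong" case. The sketch below is a hypothetical illustration, not any ETL tool's API. The thresholds are placeholder values, and the sketch assumes that the scheduled start, actual start, last table update, and row count are all available from run metadata.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def job_health_issues(
    now: datetime,
    scheduled_start: datetime,
    actual_start: Optional[datetime],
    last_data_update: datetime,
    rows_loaded: Optional[int],
    start_grace: timedelta = timedelta(minutes=15),   # illustrative default
    max_staleness: timedelta = timedelta(hours=2),    # illustrative default
    min_rows: int = 1,                                # illustrative default
) -> list[str]:
    """Hypothetical checks that a failure-only job monitor would miss."""
    issues = []
    # Not started: the schedule (plus a grace period) has passed and no run has begun.
    if actual_start is None and now > scheduled_start + start_grace:
        issues.append("not_started")
    # Latency: the target table has not been refreshed recently enough.
    if now - last_data_update > max_staleness:
        issues.append("stale_data")
    # Volume: a run that loaded too few rows can still report success to the ETL tool.
    if rows_loaded is not None and rows_loaded < min_rows:
        issues.append("low_volume")
    return issues


now = datetime(2025, 1, 1, 6, 30, tzinfo=timezone.utc)
print(job_health_issues(
    now,
    scheduled_start=datetime(2025, 1, 1, 6, 0, tzinfo=timezone.utc),
    actual_start=None,
    last_data_update=datetime(2025, 1, 1, 0, 0, tzinfo=timezone.utc),
    rows_loaded=None,
))
# prints: ['not_started', 'stale_data']
```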

From Reactive to Proactive: A Real-World Transformation

Let me illustrate the evolution from traditional reactive approaches to intelligent automation through a scenario that we see playing out daily in enterprise environments.

Without Pantomath

An Informatica data integration job is running longer than expected, but the downstream Snowflake stored procedure, triggered by a Snowflake Task, still starts at its scheduled time.

The overlap between the two jobs leads to missing rows in a downstream Snowflake table. The problem ripples across several touchpoints in the data pipeline, leaving bad data in multiple Tableau reports.

Without proper observability at ingestion, this issue goes undetected until business users notice incorrect reports. By then, decisions have been made on bad data, and the troubleshooting process begins, often taking days to trace back to the root cause.
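The root cause here is a race condition. A time-based Snowflake Task has no way of knowing whether its upstream load has finished. The following is a minimal sketch of spotting that overlap from run timestamps. It assumes that start and end times for both jobs can be pulled from each platform's run history. The function name is hypothetical.

```python
from datetime import datetime
from typing import Optional


def started_during_upstream(
    upstream_start: datetime,
    upstream_end: Optional[datetime],
    downstream_start: datetime,
) -> bool:
    """True if the downstream run began while the upstream run was still in progress.

    upstream_end is None while the upstream job is still running.
    """
    if downstream_start < upstream_start:
        # The downstream run began before this upstream run did, so it read the previous load.
        return False
    return upstream_end is None or downstream_start < upstream_end


# Hypothetical timeline: Informatica started at 01:00 and was still running
# when the Snowflake Task fired at 02:00.
print(started_during_upstream(datetime(2025, 1, 1, 1, 0), None, datetime(2025, 1, 1, 2, 0)))
# prints: True
```

Run against historical records, a check like this only surfaces overlaps after the fact. Preventing them requires the downstream job to wait on the upstream job's completion rather than on a clock.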

With Pantomath

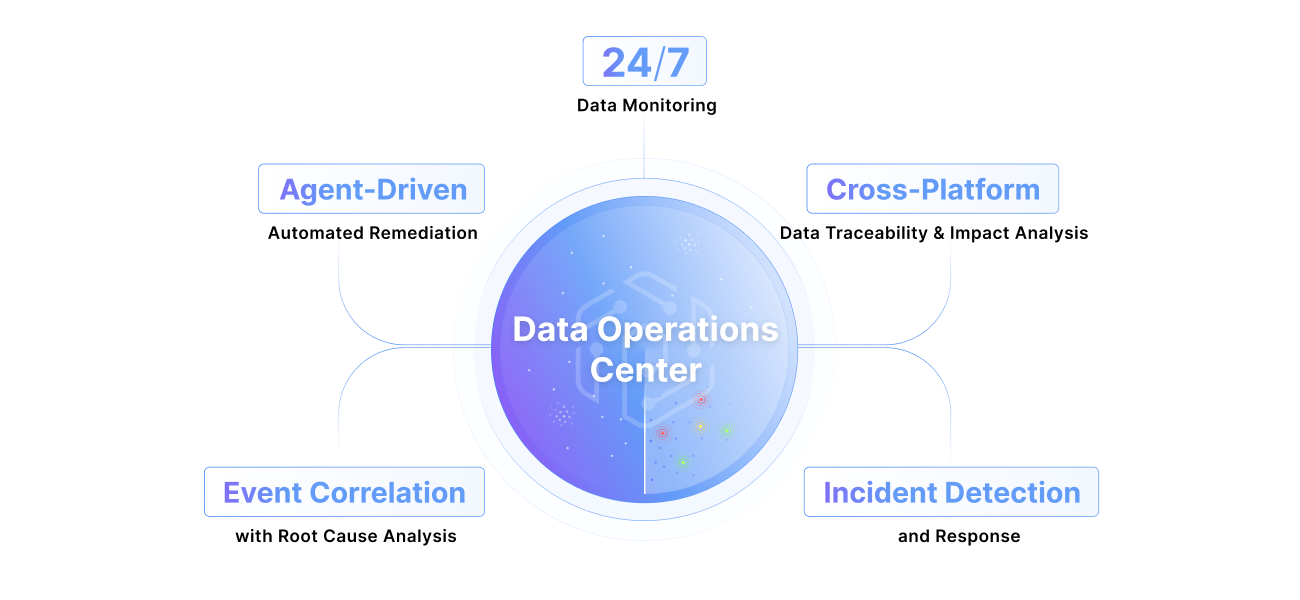

Pantomath is your Data Operations Center. It monitors the entire data pipeline, from data producers to data consumers, for both data-in-motion and data-at-rest issues, and catches that same long-running Informatica job in real time. An automated alert notifies the data operations team with a clear outline of the issue, the path to resolution, and the expected time to resolution.

Pantomath explains what is broken (in this case, the Informatica job and its child tasks), why it is broken (root-cause analysis from logs and metrics), and where the impact lies (the Snowflake tables with missing data or volume issues and the Tableau reports that depend on them, identified through traces).
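Catching a long-running job in real time comes down to comparing a run's elapsed time against its own history on every polling cycle. The sketch below uses a simple mean-plus-k-standard-deviations threshold as an assumed stand-in. It is not Pantomath's detection method, and the parameter values are illustrative.

```python
import statistics


def is_running_long(
    history_minutes: list[float],
    elapsed_minutes: float,
    k: float = 3.0,          # illustrative sensitivity
    min_history: int = 5,    # illustrative minimum sample size
) -> bool:
    """Flag a still-running job whose elapsed time exceeds mean + k * stdev of its past runs."""
    if len(history_minutes) < min_history:
        return False  # too little history to call anything abnormal
    mean = statistics.mean(history_minutes)
    stdev = statistics.stdev(history_minutes)  # sample standard deviation
    return elapsed_minutes > mean + k * stdev


past_runs = [40, 42, 38, 41, 39]  # hypothetical runtimes in minutes
print(is_running_long(past_runs, 60))  # prints: True
print(is_running_long(past_runs, 43))  # prints: False
```

With these past runtimes, the threshold works out to roughly 44.7 minutes. A production version would also need a minimum margin, so that a job with near-identical past runtimes isn't flagged for being a minute late. It would also need to account for seasonal patterns such as month-end loads.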

We're rapidly approaching a future where data pipelines don't just alert you to problems; they self-heal autonomously. With the data reliability engineering agent geared toward full self-resolution, Pantomath will automatically pause every downstream job through circuit breakers and sequentially re-run each job once the upstream job(s) have completed. No manual DAGs or event-based orchestration need to be defined, since Pantomath's AI agent leverages the data health graph and a RAG architecture to automatically detect and resolve issues in real time.
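The circuit-breaker-plus-ordered-rerun idea can be sketched in a few lines. When an upstream job overruns, every job downstream of it is paused. Once the upstream job finishes, the paused jobs are released in dependency order. Pantomath describes inferring these dependencies from its data health graph. This sketch instead takes a hand-written dependency map, which is an assumption. The actual pause and resume calls into Informatica, Snowflake, or Tableau are omitted because they depend on each platform. This is an illustration of the technique, not Pantomath's implementation. It requires Python 3.9+ for `graphlib`.

```python
from graphlib import TopologicalSorter


def plan_recovery(deps: dict[str, set[str]], upstream: str) -> list[str]:
    """Jobs to pause behind `upstream`, in an order that is safe to re-run once it completes.

    deps maps each job to the set of jobs it directly depends on.
    """
    # Invert the dependency map so we can walk from a job to its consumers.
    children: dict[str, set[str]] = {}
    for job, parents in deps.items():
        for parent in parents:
            children.setdefault(parent, set()).add(job)

    # Circuit breaker: everything reachable downstream of `upstream` is paused.
    paused: set[str] = set()
    stack = [upstream]
    while stack:
        for child in children.get(stack.pop(), set()):
            if child not in paused:
                paused.add(child)
                stack.append(child)

    # Re-run order: a topological sort restricted to the paused jobs, so each job
    # runs only after the paused jobs it depends on. A cycle raises graphlib.CycleError.
    subgraph = {job: deps.get(job, set()) & paused for job in paused}
    return list(TopologicalSorter(subgraph).static_order())


# Hypothetical dependency map for the Informatica -> Snowflake -> Tableau scenario.
deps = {
    "snowflake_proc": {"informatica_job"},
    "snowflake_table": {"snowflake_proc"},
    "tableau_revenue": {"snowflake_table"},
    "tableau_ops": {"snowflake_table"},
    "unrelated_job": set(),
}
order = plan_recovery(deps, "informatica_job")
print(order)
# snowflake_proc, then snowflake_table, then the two Tableau refreshes in either order
```

Jobs that don't depend on the overrunning job, such as `unrelated_job`, are never paused. This is the main advantage over halting an entire schedule.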

Start Right, at the Ingestion Stage

Data pipelines will always face disruptions, especially at the critical point of ingestion: the moment when external, often unpredictable data enters your ecosystem. But by prioritizing observability and automation right at the source, you change the game. You move your teams from firefighting to forward-thinking, from endless troubleshooting to true innovation.

For example, in our work with one enterprise, we saw six-figure savings in manual toil within the first few months of a single implementation. But the real value isn't just cost savings; it's enabling teams to focus on innovation rather than firefighting.

Ingestion is where small issues are easiest to spot, quickest to fix, and least likely to spread unchecked. And as leading organizations are discovering, empowering teams with deep observability and intelligent automation at this entry point pays dividends.

This is the foundation of data infrastructure that works for you, not against you: a platform where every newly ingested dataset strengthens reliability instead of introducing new risks. The future of data operations isn’t just more sophisticated monitoring; it’s a shift to intelligent, proactive systems that solve issues at the source and set the stage for genuine data-driven advantage. That future begins where your data does: at ingestion.

Reach out to learn more about our solution here!
