2026 predictions for data leaders on where accountability shifts next, from AI data pipelines and data quality risk to stack consolidation and audited data products.

2026 Predictions for Data Leaders: Where Accountability Moves Next

Most industry predictions focus on what is new. New tools, new architectures, new AI capabilities. Those conversations are interesting, but they often miss the more important question facing data leaders right now: who is actually accountable when things break, costs spike, or AI decisions go wrong.

The shifts that will define 2026 are not about chasing trends. They are about how accountability moves inside large enterprises. From tools to business outcomes. From platforms to economics. And from analytics into real AI execution.

For CDOs and senior data leaders, the next year will quietly determine whether the role expands in influence or gets narrowed to reporting and governance. The difference will come down to ownership. Not ownership of models or infrastructure, but ownership of the data pipelines, quality signals, and operational trust that everything else depends on.

Here are five predictions we believe will shape that outcome:

- CDOs who do not control AI data pipelines will lose relevance to CTOs

- Data quality KPIs will replace model accuracy as the primary AI risk metric

- The modern data stack will shrink from 15–20 tools to fewer than 7

- Data products will become audited assets, not aspirational concepts

- More than half of enterprise analytics will bypass BI tools entirely

Let’s go further into each one.

#1 - CDOs who don’t control AI data pipelines lose relevance to CTOs

“The CDO who does not own AI data pipelines owns neither trust nor impact.”

Historically, the division of responsibility was clear. CDOs focused on analytics, reporting, and governance. CTOs owned production systems. AI collapses that boundary.

Modern AI systems depend on continuously updated features, real-time data feeds, and retraining pipelines that blur the line between analytical and operational data. When those pipelines live outside governed platforms, the CDO’s role shifts toward oversight rather than impact.

By 2026, enterprises will expect governed, real-time feature pipelines on their lakehouse platforms. AI teams that source data through side pipelines or unmanaged integrations will create an uncomfortable reality: the CDO is accountable for trust, but does not control the inputs that determine it.

The most effective CDOs are already responding by taking ownership of data readiness, reliability, and pipeline traceability across both analytical and operational flows. This does not mean owning models or application logic. It means owning the quality, freshness, and governance of the data that AI systems consume.

Common objections surface quickly. AI is seen as the domain of the CTO or a Chief AI Officer. Production systems feel outside the traditional analytics mandate. There is understandable hesitation to inherit operational risk.

But owning pipelines is not the same as owning models. The CDO’s responsibility is the fuel, not the engine. As models retrain continuously, operational data becomes strategic data. If trust cannot be guaranteed at the data layer, accountability for outcomes becomes impossible.

In that environment, influence follows control.

#2 - Data quality KPIs replace model accuracy as the #1 AI risk metric

“In AI systems, bad data is not a defect. It is a liability.”

AI conversations still revolve around model selection and accuracy metrics, but enterprise experience is already pointing elsewhere. Most AI failures do not originate in the model. They originate upstream, in stale data, broken semantics, delayed pipelines, or untraceable transformations.

As AI systems become customer-facing and decision-making becomes automated, the consequences of poor data quality become more visible and more expensive. In response, organizations are shifting investment toward data contracts, lineage, and pipeline observability, often on Snowflake or Databricks, rather than incremental model tuning.

By 2026, regulators and auditors will increasingly ask for proof of data lineage, freshness, and bias controls, not just documentation of model behavior. This places the CDO squarely in the role of AI risk owner, regardless of whether the title reflects it.
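To make "proof of freshness" concrete, here is a minimal sketch of how a freshness KPI could be computed against a simple data contract. The `FreshnessContract` class, the `customer_features` dataset name, and the one-hour threshold are all illustrative assumptions, not part of any specific platform or product.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class FreshnessContract:
    """Hypothetical contract: the dataset must be refreshed within max_age."""
    dataset: str
    max_age: timedelta


def is_fresh(contract: FreshnessContract, last_loaded_at: datetime, now: datetime) -> bool:
    """Return True when the latest load is within the contract's allowed age."""
    return now - last_loaded_at <= contract.max_age


def freshness_kpi(results: list[bool]) -> float:
    """Share of checks that met their freshness contract (0.0 to 1.0)."""
    return sum(results) / len(results) if results else 1.0


# Illustrative values only: a fixed "now" keeps the example deterministic.
now = datetime(2026, 1, 15, 12, 0, tzinfo=timezone.utc)
contract = FreshnessContract(dataset="customer_features", max_age=timedelta(hours=1))

checks = [
    is_fresh(contract, now - timedelta(minutes=20), now),  # loaded 20 min ago: fresh
    is_fresh(contract, now - timedelta(hours=3), now),     # loaded 3 hours ago: stale
]
print(freshness_kpi(checks))  # 0.5
```

In a real environment the load timestamps would come from pipeline metadata rather than hard-coded values, and the resulting KPI could be tracked per dataset over time as audit evidence.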

Many organizations believe they already have data quality programs. In practice, most of those programs were built for batch analytics and static reporting. They struggle in environments where data changes continuously, models retrain frequently, and multiple AI systems depend on shared upstream pipelines.

This is not a new problem, but it is a newly critical one. Poor data quality has always caused broken dashboards and delayed reports. In AI systems, it creates public failures, regulatory exposure, and reputational damage.

#3 - The modern data stack collapses from 15–20 tools to fewer than 7

“The biggest risk is not vendor lock-in. It is architectural entropy.”

For the past decade, enterprises tolerated sprawling data stacks because innovation felt worth the operational cost. That tolerance is ending. As AI investment grows, CFOs are scrutinizing spend everywhere else, and the hidden cost of integration and support is becoming impossible to ignore.

By 2026, warehouses and lakehouses will continue absorbing capabilities that once required standalone tools, including ingestion, transformation, ML workflows, governance, and activation. Platform vendors will accelerate native development and acquisitions, reducing the perceived value of fragmented best-of-breed solutions.

For CDOs, this makes vendor strategy a leadership issue, not just a technical one. Choosing the wrong platform center of gravity can lock teams into years of unnecessary complexity and cost.

Concerns about vendor lock-in are valid, but they often miss the current reality. Most enterprises are already locked in, just in a more chaotic way, spread across dozens of tools and custom integrations. At scale, integration drag, ticket volume, and operational noise outweigh marginal feature advantages.

This shift is not about ripping and replacing existing systems. It is about slowing stack growth and making deliberate, deeper bets going forward. The largest savings often come from the tools that never get added.

#4 - “Data products” stop being a philosophy and start being audited assets

“If a dataset has no owner and no cost, it has no value.”

Data products and data mesh promised ownership and accountability, but in many organizations they remained conceptual. Without economic signals, ownership was easy to avoid.

That changes as data costs become explicit.

By 2026, critical datasets will increasingly be treated like financial assets, complete with owners, SLAs, usage metrics, and visible cost. Internal chargebacks for Snowflake and Databricks usage will become common, forcing teams to think in terms of value creation rather than data volume.
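As a rough illustration of what "visible cost" per dataset might look like, the sketch below rolls hypothetical usage records up into a cost per dataset and a chargeback per team. The dataset names, team names, credit figures, and the flat `CREDIT_PRICE_USD` rate are invented for the example; real Snowflake or Databricks billing data has its own structure and pricing.

```python
from collections import defaultdict

# Hypothetical usage records: (dataset, consuming team, compute credits used)
usage = [
    ("orders_curated", "sales-analytics", 120.0),
    ("orders_curated", "finance", 30.0),
    ("clickstream_raw", "marketing", 50.0),
]

CREDIT_PRICE_USD = 3.0  # assumed flat rate per credit, for illustration only


def cost_by_dataset(records, price):
    """Total cost per dataset, summed across all consuming teams."""
    totals = defaultdict(float)
    for dataset, _team, credits in records:
        totals[dataset] += credits * price
    return dict(totals)


def chargeback_by_team(records, price):
    """Total cost charged back to each consuming team."""
    totals = defaultdict(float)
    for _dataset, team, credits in records:
        totals[team] += credits * price
    return dict(totals)


dataset_costs = cost_by_dataset(usage, CREDIT_PRICE_USD)
team_charges = chargeback_by_team(usage, CREDIT_PRICE_USD)

print(dataset_costs)  # {'orders_curated': 450.0, 'clickstream_raw': 150.0}
print(team_charges)   # {'sales-analytics': 360.0, 'finance': 90.0, 'marketing': 150.0}
```

Pairing a per-dataset cost like this with a named owner and a usage count is one simple way to start the "ROI per dataset" conversation.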

Boards and executive teams will ask direct questions about which datasets actually drive revenue, efficiency, or risk reduction. Platform uptime will matter less than ROI per dataset.

Chargebacks often raise concerns about slowing teams down. In practice, they tend to expose waste rather than hinder productivity. Low-value data becomes expensive to maintain. High-value data earns continued investment. Ownership follows incentives far more reliably than policy mandates.

#5 - More than half of enterprise analytics will bypass BI tools entirely

“In 2026, governance lives beneath the interface. BI becomes the blueprint, not the bottleneck.”

BI tools are not disappearing, but their role is changing. As natural-language and agent-based interfaces mature, especially when embedded directly into governed data platforms, they will become the primary access point for many users.

Executives and operators increasingly prefer asking questions directly against governed data rather than navigating dashboards. In this model, BI tools remain essential for defining metrics, semantics, and visual standards, but they stop being the main consumption layer.

For CDOs, this shifts the source of competitive advantage. Success depends less on dashboard design and more on the strength of the semantic layer and governance beneath it.

Concerns about chaos are understandable. In practice, well-defined semantics reduce fragmentation. Instead of thousands of dashboards with subtle differences, users interact with the same governed definitions through multiple interfaces.

Governance does not disappear. It moves below the interface.

What These Predictions Have in Common

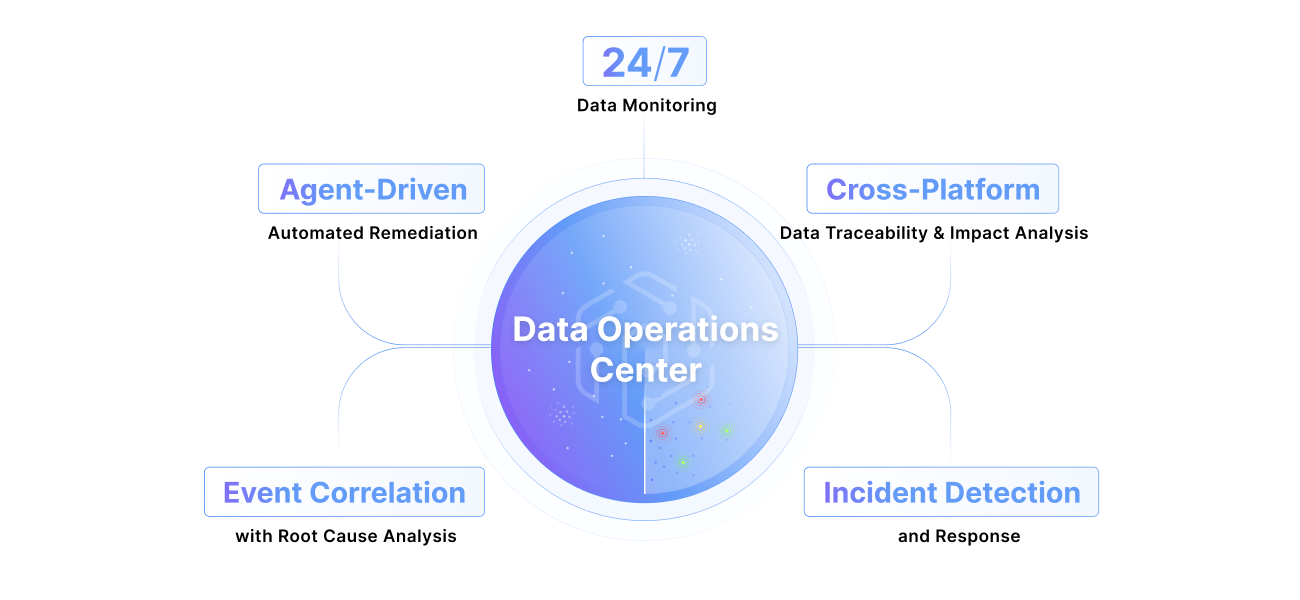

Each of these shifts points to the same underlying change. Accountability is moving upstream. Costs are becoming visible. Trust is becoming operational rather than assumed.

The organizations that succeed in 2026 will not be the ones with the most tools or the flashiest AI demos. They will be the ones that can trace issues quickly, fix problems faster, reduce noise across teams, and prove that their data reliably supports business outcomes.

For data leaders, the question is no longer whether these changes are coming. The question is whether your Data Operations model is prepared to support them.

If not, now is the right time to rethink where ownership should live and how trust is built across the data stack. Want to discuss these further with me? Reach out here.

Stay tuned for part 2, where we discuss the importance of metadata within these predictions.
