Data operations don’t fail because of tools; they fail because they’re fragmented. A unified scheduling view brings order, reliability, and confidence. See how.

Why Data Teams Need Unified Scheduling

Behind every trusted number in the boardroom is a report produced using a web of scheduled jobs that rarely run in sync. Data moves through ingestion, transformation, storage, and reporting systems, each with its own native scheduler and alerts. None of them share context. The result is fragmentation. Teams are asked to deliver data that is reliable and on time, yet they are operating blind to how jobs connect across the landscape.

When a job fails or is delayed, the impact is rarely contained. Downstream processes stall, reports are inaccurate, and confidence erodes. By the time engineers piece together what happened, the business has already felt the cost.

This is not a technical nuisance - it is a fundamental gap in how data teams operate. When the business depends on data, gaps in visibility become gaps in trust.

The Cost of Fragmentation

Consider what happens when a scheduled job fails or is delayed. The immediate question is simple: what caused it and who will feel the impact? Yet answering it often requires hours of detective work. One engineer pulls logs from the warehouse, another checks refreshes in a dashboarding tool, a third scans pipeline status in an orchestration platform. Threads in Slack or Teams fill with screenshots and speculation. By the time a clear answer emerges, the business has already noticed.

This cycle repeats itself across organizations every day. Reports are late and no one knows why. Pipelines stall and no one has visibility downstream until users complain. Latency builds silently and no one realizes the impact until it is too late. Small changes ripple into unexpected failures. More and more data engineers are pulled off project work to fix something that was built months ago. Each incident chips away at the credibility of the data team. What the business sees is not just a delay, but a lack of reliability.

The truth is that data operations are being run without the operational layer they need. Application engineering teams would never accept this - they have mature monitoring, observability, and incident management. Data teams deserve the same.

The Concept of Unified Scheduling

The idea of unified scheduling is simple. Instead of treating each scheduler as an island, bring them together into a single control plane. Not by replacing the tools, but by creating coherence across them.

With unified scheduling, there is finally one view of every job in motion. Leaders can measure reliability across the entire data landscape rather than system by system. Engineers can see upstream and downstream dependencies, understand the impact of failures or other runtime issues, and make changes with confidence. Operations teams can anticipate problems instead of reacting to them.

The shift is not only technical; it’s cultural. Unified scheduling changes the posture of the team: outages become manageable instead of chaotic, maintenance windows are planned with precision instead of guesswork, and requests from the business can be met with clarity instead of uncertainty. In short, data teams begin to operate with the rigor that the business already expects of them.

In Practice (Real-World Scenarios)

The need for unified scheduling shows up differently depending on your role.

The Reliability Engineer

When an outage occurs, the first step is always to understand the impact. Without unified scheduling, this is a slow process of toggling between consoles and piecing together status reports. With unified scheduling, the engineer can open a Gantt-style view that immediately reveals which jobs are stalled, which reports will be late, and what business impact is unfolding. What used to take hours is compressed into minutes. The engineer can move from investigation to resolution quickly, and the business receives clear, proactive communication.
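
The impact question in this scenario is, at its core, a graph traversal: start at the failed job and walk everything that consumes its output. The sketch below is illustrative only. The job names and the `DOWNSTREAM` map are hypothetical, and it does not represent how any particular product computes impact.

```python
from collections import deque

# Hypothetical dependency map: each job lists the jobs that consume its output.
DOWNSTREAM = {
    "ingest_orders": ["transform_orders"],
    "transform_orders": ["load_warehouse"],
    "load_warehouse": ["refresh_sales_dashboard", "refresh_finance_report"],
    "ingest_web_events": ["refresh_traffic_dashboard"],
}


def impacted_jobs(failed_job, downstream=DOWNSTREAM):
    """Return every job downstream of failed_job, in breadth-first order."""
    seen = set()
    order = []
    queue = deque([failed_job])
    while queue:
        job = queue.popleft()
        for child in downstream.get(job, []):
            if child not in seen:
                # Record each downstream job once, even if reachable by several paths.
                seen.add(child)
                order.append(child)
                queue.append(child)
    return order
```

Calling `impacted_jobs("transform_orders")` on this sample map lists the warehouse load first and then both report refreshes, which is the order in which the delay would reach the business.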

The Platform Engineer

Platform maintenance is a fact of life. Whether upgrading infrastructure or applying patches, these changes carry the risk of disrupting jobs. Without a clear view of dependencies, platform engineers are left to hope that nothing critical runs during the maintenance window. With unified scheduling, they can filter jobs that depend on their platform, identify which business processes are at risk, and communicate with stakeholders ahead of time. Maintenance becomes routine instead of disruptive, and engineers regain confidence that they can improve the platform without collateral damage.
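
As a rough sketch of the filtering step described here, the check reduces to an interval overlap test on the jobs tied to one platform. The `ScheduledRun` record, its fields, and the platform labels are assumptions made for illustration, and "depends on the platform" is simplified to "runs on the platform".

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class ScheduledRun:
    job: str
    platform: str        # hypothetical label for the system the job runs on
    start: datetime
    duration: timedelta  # expected runtime, e.g. taken from past runs


def runs_at_risk(runs, platform, window_start, window_end):
    """Return runs on `platform` whose execution interval overlaps the window."""
    at_risk = []
    for run in runs:
        run_end = run.start + run.duration
        # Two intervals overlap when each one starts before the other ends.
        overlaps = run.start < window_end and run_end > window_start
        if run.platform == platform and overlaps:
            at_risk.append(run)
    return at_risk
```

A run that starts before the window but is still executing when the window opens counts as at risk, which is the case most easily missed when checking start times alone.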

The Data Engineer

Change requests from the business can be deceptively simple. A stakeholder asks for a key report two hours earlier each morning. Without visibility into the pipeline, this becomes guesswork. Which upstream jobs feed the report? Can those jobs complete earlier without breaking others? The risk of introducing failure is high. With unified scheduling, the engineer can trace the construction path of the report end to end. They can see which jobs need to shift and plan accordingly. The result is a confident change delivered on time, with trust reinforced rather than eroded.
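
Tracing the construction path of a report can be sketched as collecting its transitive upstream jobs and then proposing new start times. The schedule below is hypothetical, and shifting every upstream job by the same amount is a deliberately naive assumption: a real plan would also check runtimes, slack, and other consumers of the same jobs.

```python
from datetime import datetime, timedelta

# Hypothetical schedule: job -> (start time, list of direct upstream jobs).
SCHEDULE = {
    "ingest_orders": (datetime(2025, 1, 1, 3, 0), []),
    "transform_orders": (datetime(2025, 1, 1, 4, 0), ["ingest_orders"]),
    "daily_sales_report": (datetime(2025, 1, 1, 7, 0), ["transform_orders"]),
    "weekly_audit": (datetime(2025, 1, 1, 5, 0), []),
}


def upstream_of(job, schedule=SCHEDULE):
    """Collect every direct and transitive upstream job of `job`."""
    found = set()
    stack = list(schedule[job][1])
    while stack:
        parent = stack.pop()
        if parent not in found:
            found.add(parent)
            stack.extend(schedule[parent][1])
    return found


def shifted_plan(report, shift, schedule=SCHEDULE):
    """Propose earlier start times for the report and everything feeding it."""
    affected = upstream_of(report, schedule) | {report}
    return {job: schedule[job][0] - shift for job in affected}
```

With `shifted_plan("daily_sales_report", timedelta(hours=2))`, only the report and the two jobs that feed it receive new start times; the unrelated `weekly_audit` job is left alone.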

The Operations Leader

For operations leaders, the start of the day is often a scramble. As one operations leader put it: “I used to wake up every morning and check my phone to see how difficult my day was going to be.” Which jobs are late or didn’t start? Which ones failed overnight? Where should the team focus first? Without unified scheduling, triage relies on scattered, duplicative alerts and frantic messages. With unified scheduling, leaders open a single dashboard that shows all jobs, ranked by business criticality. They can direct the team to the most important issues, prevent minor incidents from escalating, and demonstrate control to the business. The team looks organized because they finally have the visibility to be organized.
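
The morning triage described here combines two steps: collapsing duplicate alerts about the same job, then ranking what remains by business criticality. The following is a minimal sketch under stated assumptions. The `JobStatus` fields, the status names, and the 1-to-3 criticality scale are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical status ordering: lower number means more urgent.
STATUS_URGENCY = {"failed": 0, "not_started": 1, "late": 2, "ok": 3}


@dataclass
class JobStatus:
    job: str
    source: str       # scheduler or tool that reported the status
    status: str
    criticality: int  # hypothetical scale, 1 = most business-critical


def triage(statuses):
    """Deduplicate by job, drop healthy jobs, rank by criticality then urgency."""
    worst = {}
    for s in statuses:
        current = worst.get(s.job)
        # Keep only the most urgent report for each job.
        if current is None or STATUS_URGENCY[s.status] < STATUS_URGENCY[current.status]:
            worst[s.job] = s
    open_issues = [s for s in worst.values() if s.status != "ok"]
    return sorted(open_issues, key=lambda s: (s.criticality, STATUS_URGENCY[s.status]))
```

Sorting on criticality first means a late job that feeds a critical report outranks an outright failure in a low-priority refresh, which matches the idea of focusing the team on business impact rather than alert volume.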

Why It Matters to Data Leaders

For executives, the details of scheduling can feel tactical. But the outcomes are not. Every missed SLA is a broken promise. Every delayed report is a dent in the perception of reliability. These are credibility issues, not technical ones.

Unified scheduling addresses them at the root. It allows leaders to make reliability measurable and repeatable. It transforms operations from reactive confusion into proactive management. It gives teams the posture of maturity that the business already assumes they have. And it sets the stage for data to be seen as a dependable partner to the organization rather than a source of friction.

Where Pantomath Fits

This is exactly the problem we built Job Pulse to solve. Job Pulse unifies scheduled jobs across platforms into one command center. It gives every role the visibility they need, from reliability engineers investigating incidents to executives protecting the credibility of the data function. It allows teams to see performance over time, understand dependencies, and focus on the pipelines that matter most.

By putting scheduling into a single frame, Job Pulse helps data teams run operations that are reliable, predictable, and measurable. It turns the concept of unified scheduling into a practical capability that teams can use today.

Data operations cannot be left fragmented. The stakes are too high, and the expectations from the business are only increasing. Unified scheduling is not just a feature. It is the next step in how modern data teams need to operate. If your team is ready to move beyond fragmented monitoring and toward unified control, set up time to speak with us.

Keep Reading

.png)

December 9, 2025

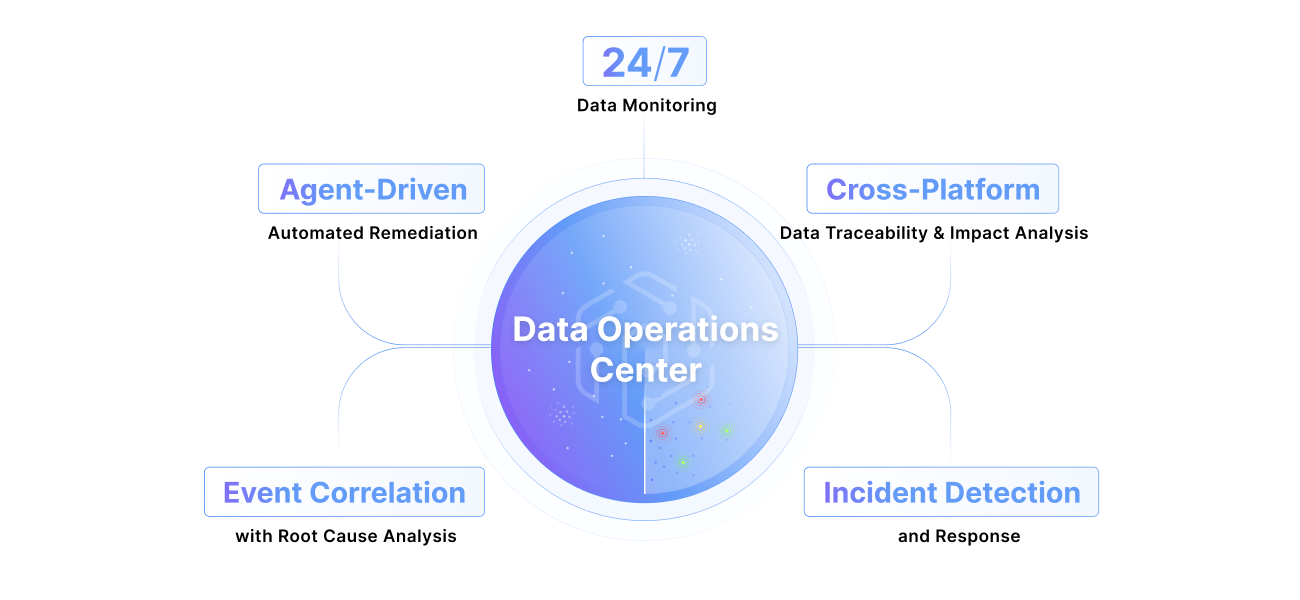

From NOC and SOC to DOC: The New Standard for Enterprise Data OperationsThe DOC isn’t a “nice-to-have,” it’s the next frontier—and it’s here. Read about the evolution from NOC to SOC to DOC, three functions enterprises cannot go without.

Read More.png)

October 28, 2025

Pantomath Achieves ISO/IEC 27001:2022 CertificationPantomath announced today that it has achieved ISO/IEC 27001:2022 certification for its Information Security Management System (ISMS).

Read More.png)

October 27, 2025

Rethinking Incident Response: How Agentic AI Transforms L1 to L3 WorkflowsDiscover how Agentic AI transforms L1–L3 incident response, automating root cause analysis and streamlining data operations across the enterprise.

Read More